Hey, I want to get this write-up out quickly, before I lose my excitement about this topic. That would be really bad, because this is the most important nutrient for human biology: maybe not objectively (hey, oxygen and protein still exist), but conditionally, due to our insane modern lifestyles that almost completely rob us of it. Anyway...

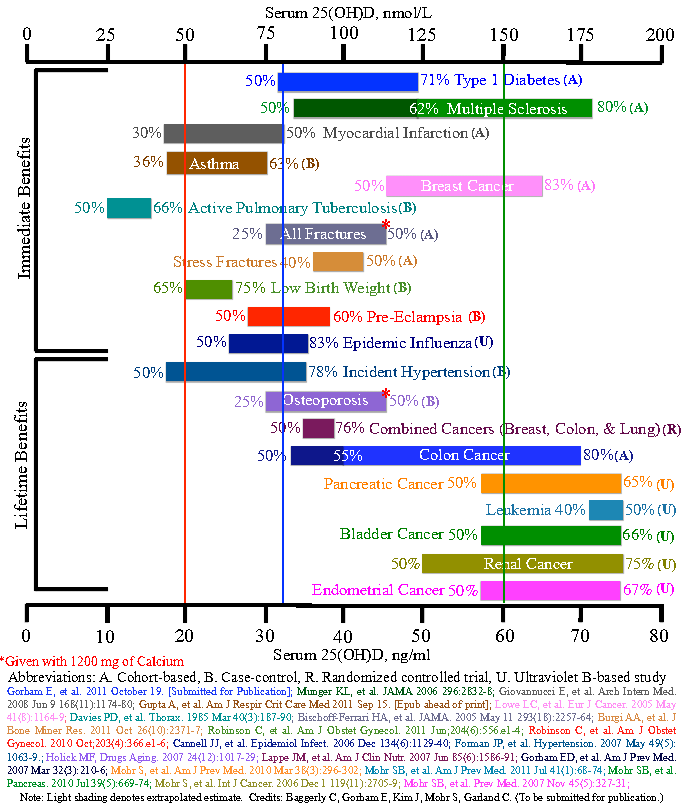

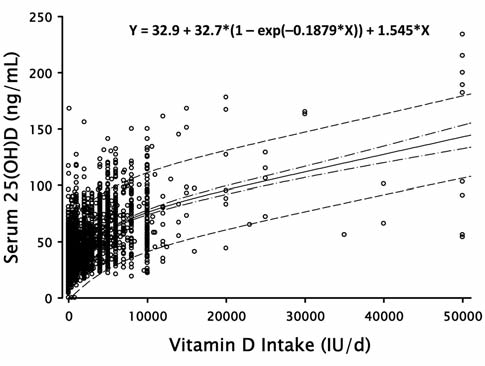

UPDATE December 2024: new chart. However, the rest of this section will pretend the old one is still current, as all my calculations were done against it. Keep this in mind whenever you see statements like "40 ng/ml prevents whatever disease", as the current values might be higher. In particular, note that the highest blood vitamin D level known to improve disease resistance is now 75 ng/ml (187 nmol/L), not the previous 54 ng/ml (135 nmol/L), and even higher levels could prove beneficial once additional studies are done. These new values pretty much ensure that absolutely no one has enough without large doses of long-term supplementation. Here is the old chart, by the way.

The more vitamin D a person has in their blood, the lower their probability of developing a disease such as cancer or diabetes, or of suffering a fracture or a heart attack. Though many nutrients have such associations, there is no other that affects the human body in such a fundamental way. Vitamin D is special for one more reason, too, but we'll come to that later. You're probably now asking yourself: why should we care?

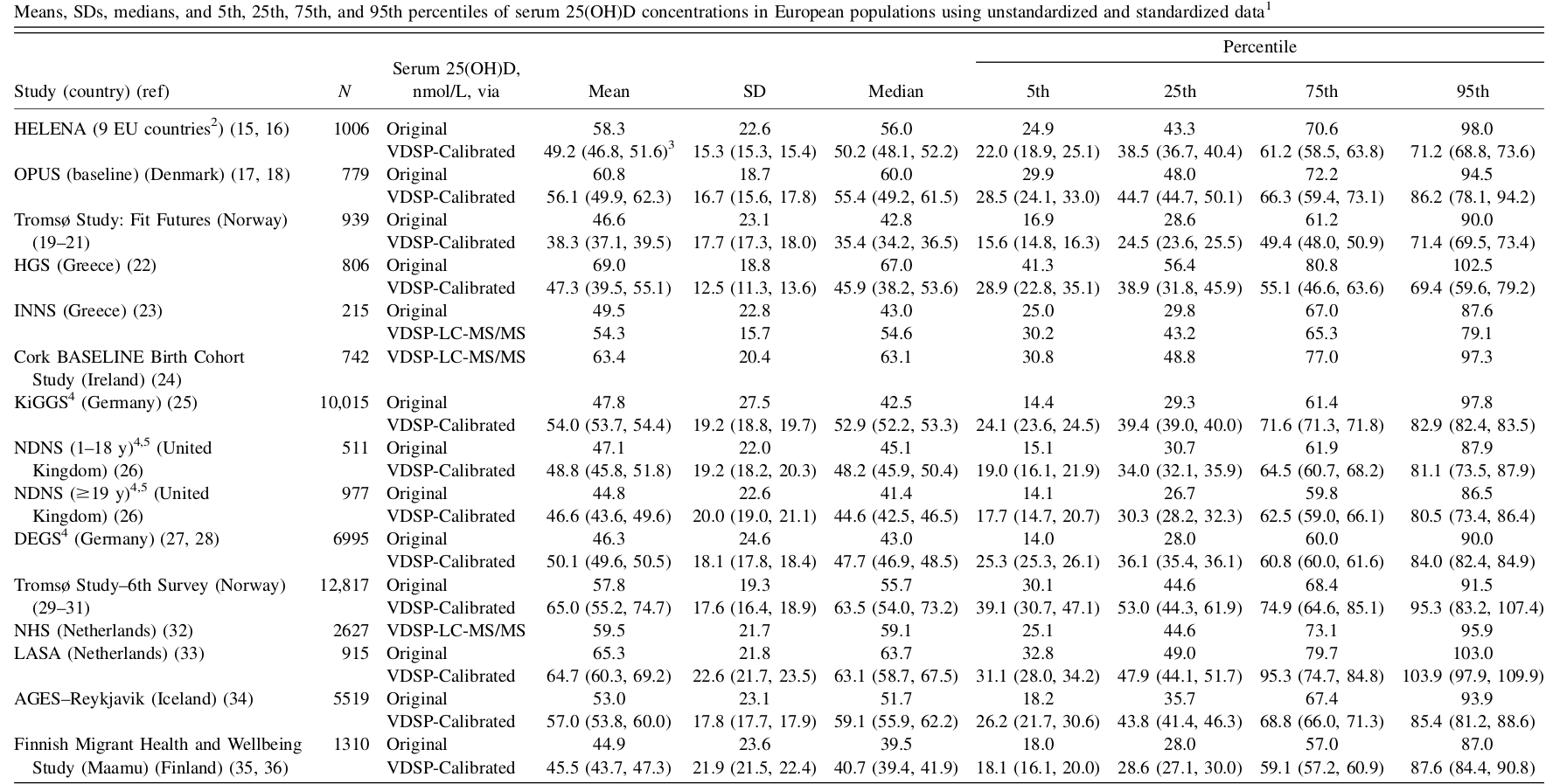

I mean, we surely get enough of vitamin D through our regular lifestyles, right? Actually, nothing could be further from the truth. Everyone is deficient! Let's jump right in for the proof (local):

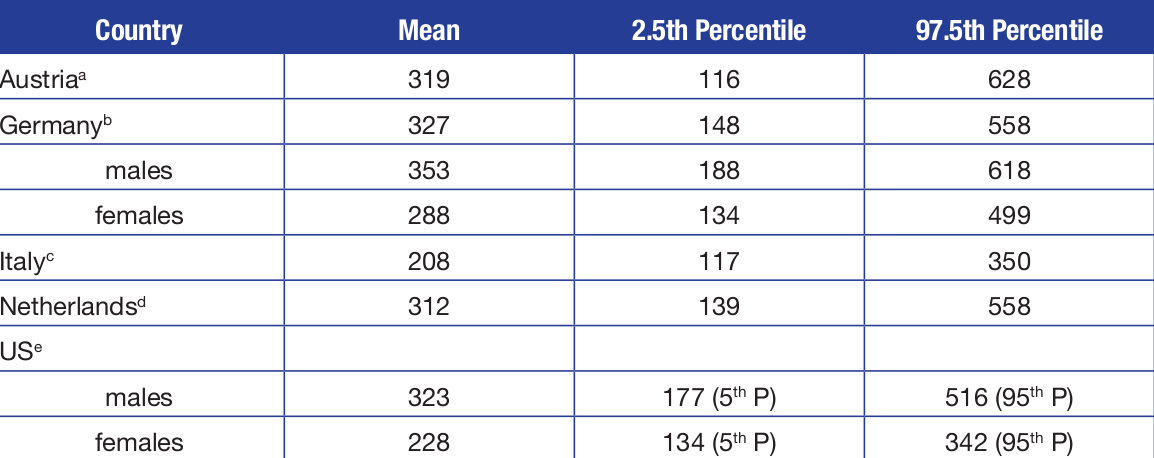

The 95th percentile column is the one that interests us the most: it lists the blood vitamin D level that 95% of people in a given country do not reach (values are rounded to make them easier to read). The reason we're looking at it rather than the average is that it shows us the best-case scenario. If even the "best" is terrible, the rest is sure to be worse. And so, across 15 studies and 16 different countries, the highest "best" result was the Netherlands' 104 nmol/L (about 42 ng/ml). Meaning at least 95% of people there fail to maximally prevent various cancers and diabetes. But since the average in even that "best" study is only 65.3 nmol/L (about 26 ng/ml), many of the participants fail to even prevent heart attacks or fractures, not just diabetes and cancers. All other countries fare worse, and some much worse. Germany and the UK, for example, don't even pretend to be healthy: with their sub-20 ng/ml averages, they get absolutely ravaged by all the listed diseases, and sometimes even rickets.
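To make the unit juggling in this section easy to check, here's a minimal Python sketch of the nmol/L to ng/ml conversion for 25(OH)D. It assumes the usual factor of about 2.496 nmol/L per ng/ml (the text rounds it to 2.5) and uses the Netherlands figures above plus the old chart's 54 ng/ml ceiling; the function and variable names are just illustrative.

```python
# 1 ng/ml of 25(OH)D is about 2.496 nmol/L (the text rounds this to 2.5).
NMOL_PER_NG = 2.496

# Level that prevents all listed diseases on the old chart, in ng/ml.
TARGET_NG = 54.0


def nmol_to_ng(nmol_per_l: float) -> float:
    """Convert serum 25(OH)D from nmol/L to ng/ml."""
    return nmol_per_l / NMOL_PER_NG


def ng_to_nmol(ng_per_ml: float) -> float:
    """Convert serum 25(OH)D from ng/ml to nmol/L."""
    return ng_per_ml * NMOL_PER_NG


# Netherlands figures quoted above, in nmol/L.
netherlands = {"95th percentile": 104.0, "average": 65.3}

for label, nmol in netherlands.items():
    ng = nmol_to_ng(nmol)
    print(f"{label}: {nmol} nmol/L = {ng:.1f} ng/ml, "
          f"{TARGET_NG - ng:.1f} ng/ml below {TARGET_NG:.0f}")

print(f"{TARGET_NG:.0f} ng/ml = {ng_to_nmol(TARGET_NG):.0f} nmol/L")
```

With the rounder 2.5 factor the Netherlands gap comes out at 12.4 ng/ml instead of 12.3, in line with the roughly 13 ng/ml estimated below.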

As the disease incidence chart showed us, the blood level of vitamin D that prevents all of the listed diseases is 54 ng/ml or 135 nmol/L. Something none of the European countries come even close to reaching. For the 5% of remaining people that have more vitamin D levels than listed in the 95th percentile

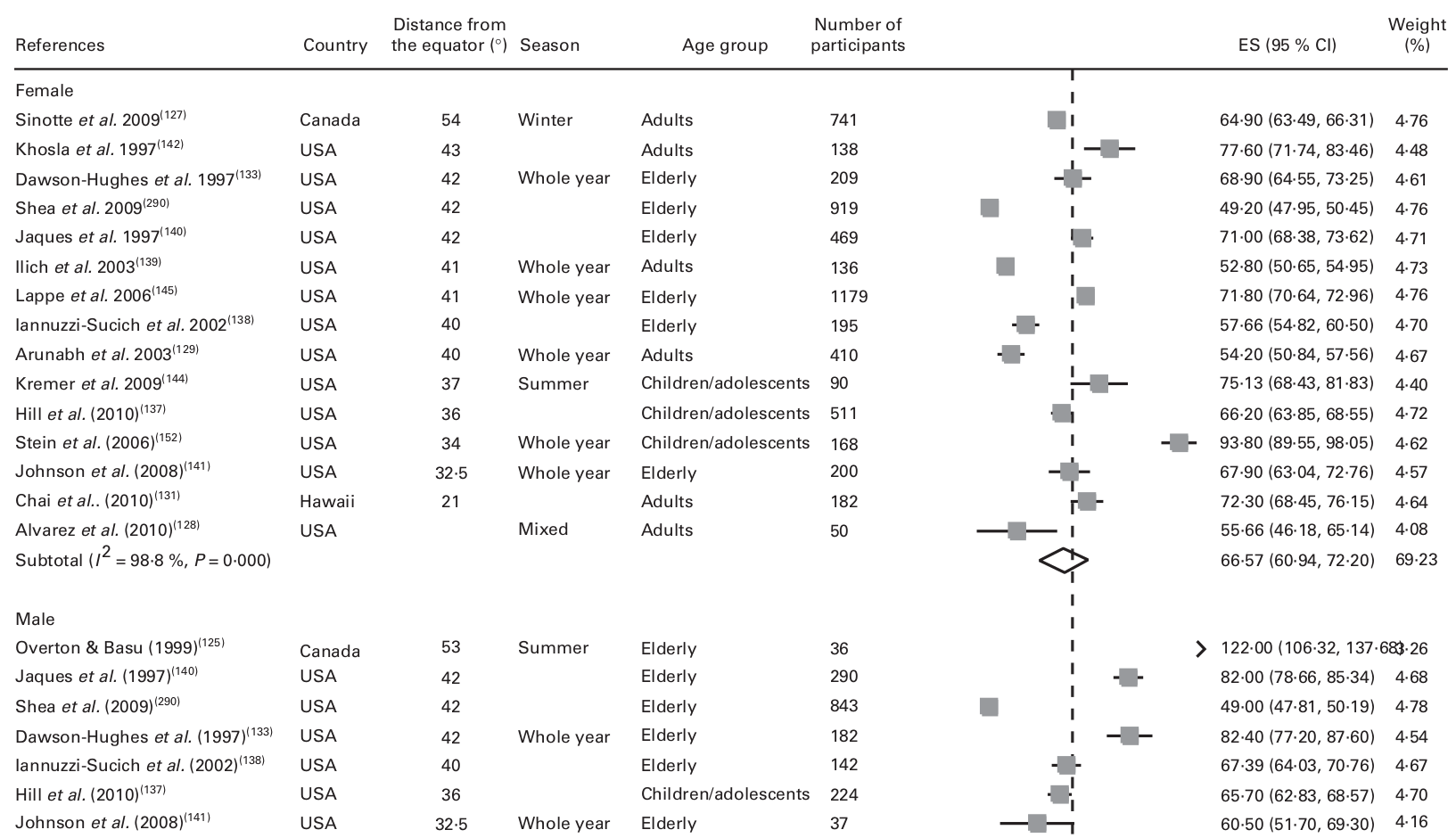

column, it is doubtful they gain the 13 ng/ml required to reach sufficiency. And that's considering only the best case scenario - the Netherlands study. In other countries, those outliers would need like 20 ng/ml more. Therefore, we can safely say that everyone living in Europe is deficient. Note: some evidence actually shows that other diseases might require even higher Vit D levels, but we will stick to these for now - since they alone are enough to prove our point. What about other regions? It turns out that it's not just Europe that's deficient, but the entire world. I suspected it all along but I did not have good data proving it at first, but now I found it (local). Look at the data from North America:

There is one study, Overton and Basu (1999), with a 122 nmol/L (49 ng/ml) vitamin D average, which is really good. The sample size is fairly small and the measurements were taken in summer, meaning the levels will fall later. But okay, we'll accept it as a study showing that at least some people in Canada are almost at the optimal level (though still failing to completely prevent multiple sclerosis and type 1 diabetes). The rest of the studies fare terribly, though, as expected. Looking at the ES (95% CI) column again, we can see that the second best study is Stein et al. (2006), in which 95% of people have a vitamin D level below 98 nmol/L (39 ng/ml). Notice how there are far more participants there, and it's based on levels from the entire year, so it's more reliable. In addition to the diseases already mentioned, we can add many cancers to the list of those it fails to cover. And that's the second best! The third best is Dawson-Hughes et al. (1997), where 95% of people have vitamin D levels below 82 nmol/L (33 ng/ml), adding worse protection against fractures and many cancers. After that, we reach joke territory, with most studies showing values below 72 nmol/L (29 ng/ml) and some even under 60, where we can add, for example, heart attacks to the list of vulnerabilities. The worst cases, those with 49 nmol/L averages, even fail to completely prevent rickets. Clearly the North American region won't be our savior. Let's try Asia and New Zealand, then:

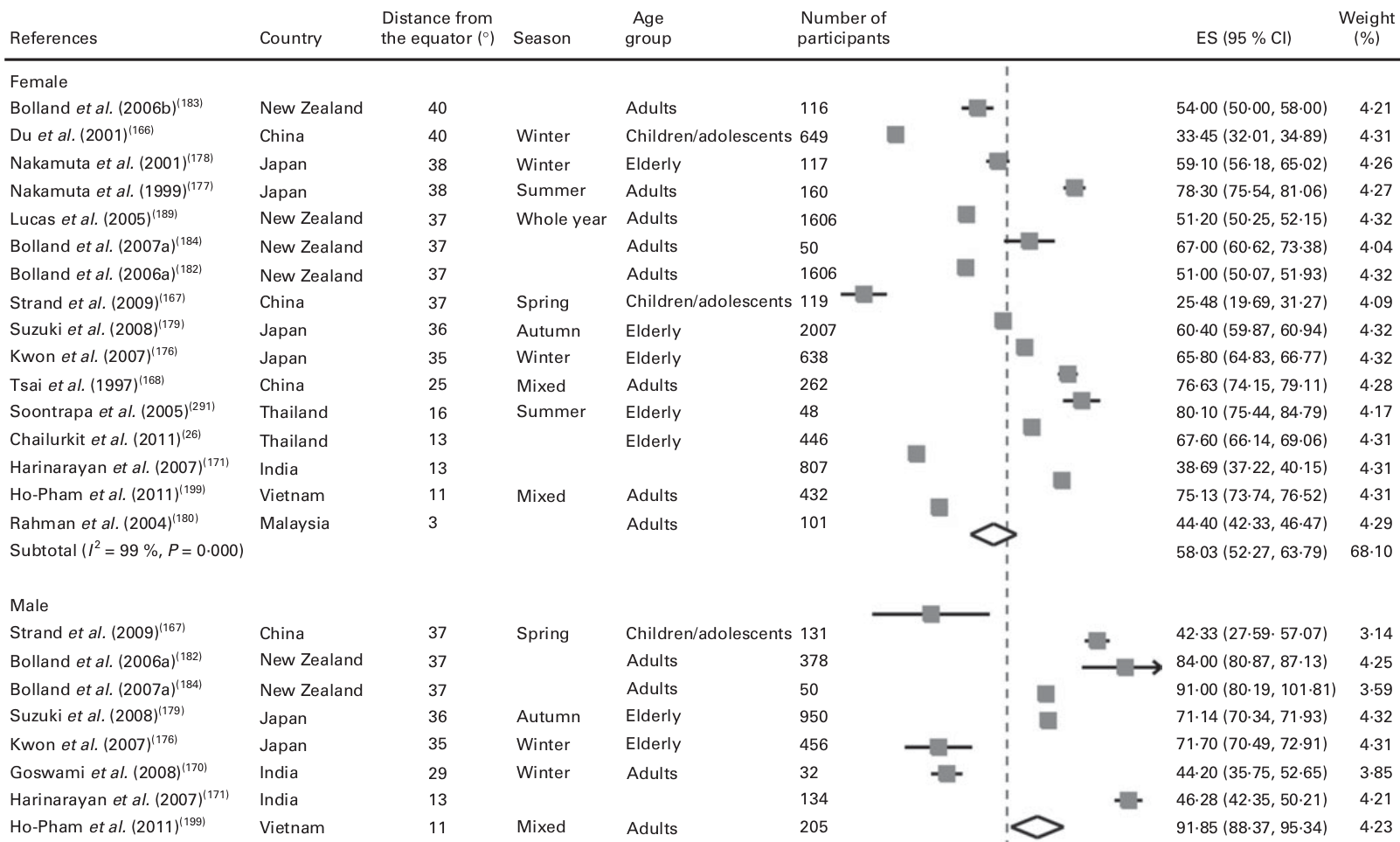

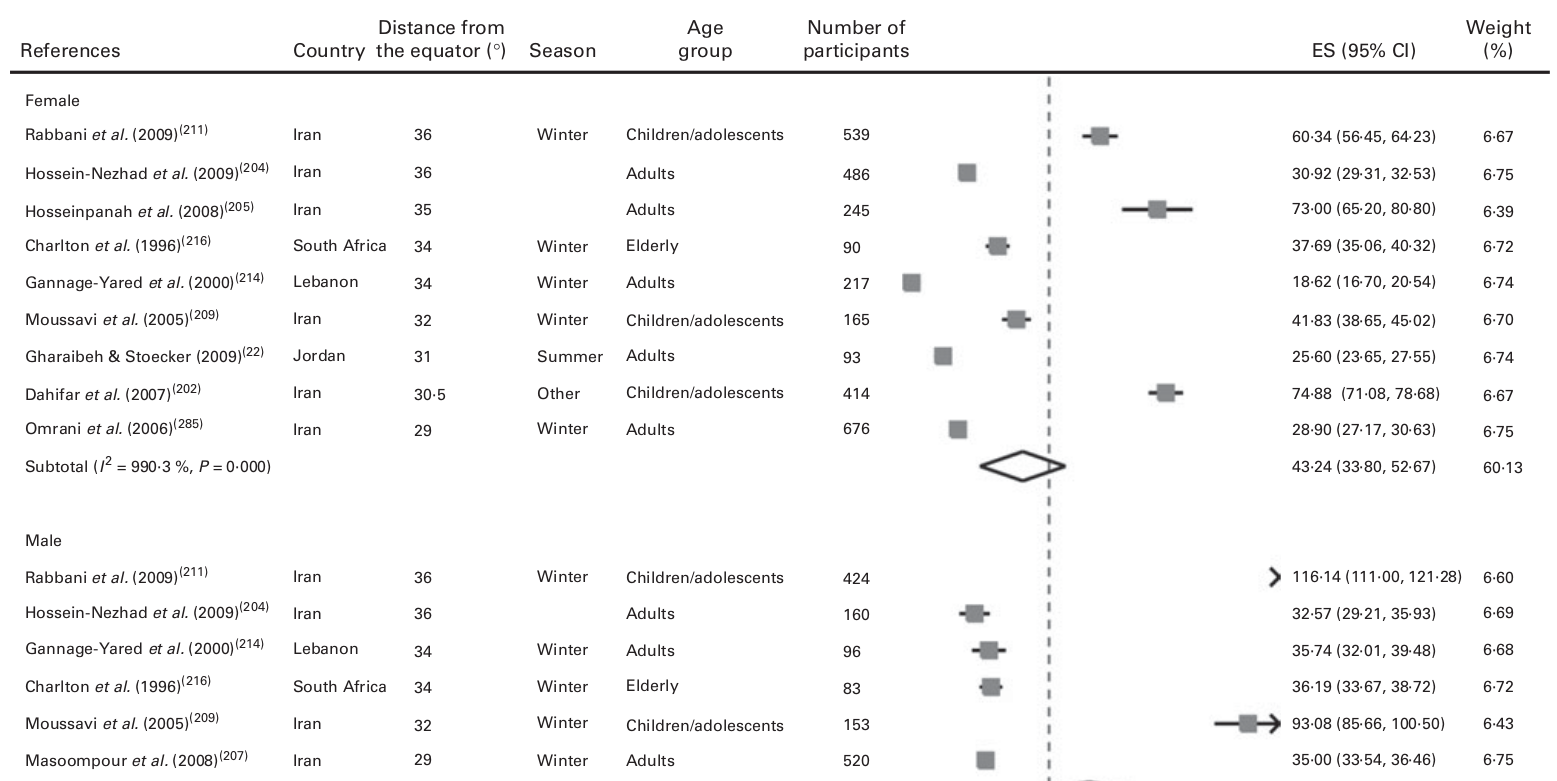

You should know how to read these by now. The strongest study is New Zealand's Bolland et al. (2007), where 95% of people have vitamin D values below 102 nmol/L (41 ng/ml). This still isn't optimal (again succumbing to cancers, diabetes, MS and fractures), and it's the best we've got in this region. The average is higher in Vietnam's Ho-Pham et al. (2011), but the peak is a lot lower. Most of the remaining studies do terribly, even worse than the North American ones. There are three Indian studies, and all come out lower than 50 nmol/L (20 ng/ml, corpse level!). China doesn't do much better: though one study does have an average of 77 nmol/L (31 ng/ml), the others are 33 and 25 nmol/L (13 and 10 ng/ml; the corpses are barely twitching there). Japan far outclasses China, with one study showing a 78 nmol/L average (31 ng/ml), two about 70 (28) and the others about 60 (24). All that means is that it's not ripped apart by rickets, but still dies from heart attacks. Is that all Asia has to offer? A third-league competition, where the winner earns the prize of a bunch of diseases, while the runners-up just die from heart attacks. While the USA and Canada have two studies that just barely get affected by rickets, this region has eight, and some very heavily so! Hey, maybe we'll find more fighting spirit in the Middle East and Africa, so let's fly there:

One big outlier is Rabbani et al. (2009) in Iran: a decent sample size, and in winter too! Wow, so it is possible to avoid deficiency all year after all, with a 116 nmol/L (46 ng/ml, very close to optimal!) average. But this still doesn't fully cover diabetes or MS. And, as expected, all the others fail, even in Iran itself, with one study averaging 93 nmol/L (37 ng/ml, now succumbing to all cancers and fractures), one 75 (30, adding heart attacks), one 73 (29), one 60 (24, adding falls), one 41 (16), one 31 (12) and one 29 (12; wow, the zombie apocalypse is approaching!). Other countries are even worse: Lebanon, Jordan... can those zombies even still crawl with values of 10 ng/ml or less?! Out of 16 studies, only five manage to prevent rickets completely. Wow! If Asia was a third-league competition, this region must be the children's league. Keep in mind this analysis divides results by sex, so a single study can show very different results for male and female participants (Rabbani et al. (2009) and Moussavi et al. (2005) show huge differences!). This is most likely because many women in these largely Muslim countries cover most of their skin, which prevents vitamin D synthesis from the Sun. But even the males do terribly here, overall.

Please come back to the disease incidence chart and check what all those results mean. Cancers, for example, need 40+ ng/ml (100 nmol/L) to be prevented, and only those two massive Canada and Iran outliers even reach that level! And that's not even the end of the chart! Yes, even our best result, the Canadian one, fails to beat the final boss of multiple sclerosis, which requires 54 ng/ml or 135 nmol/L. How sad is it that we have to be happy about those still suboptimal results? And those are extreme outliers, remember; most others are barely hanging on, being ripped apart by cancers and fractures (even the "second best" results!). And we even have a bunch of corpses with <20 ng/ml whose bones must surely have been devoured already.

By the way, I did not re-analyze Europe, though this study does have data on it, too. I already did that above, and nothing has changed: they're just as deficient there. Also, for transparency's sake, there are more studies that weren't included in the images, because the authors decided to exclude those that lacked the 95% CI or separation by sex. Some of the Thai ones, with their 120+ averages, are impressive indeed; but they have two values listed, and the second ones are a lot lower, so I don't know what to think. If we take the first ones at face value, one of the Thai studies wins the game with a 168 nmol/L average. Hooray! But the additional studies also add way more <30 ng/ml corpses into the mix, so the overall situation becomes even worse. And we have no idea whether those values are even reliable; the study doesn't say why there are two listed for those particular studies.

So let's recap. Piece of the puzzle #1: the blood vitamin D levels people on Earth actually have. Piece of the puzzle #2: the blood vitamin D levels necessary to prevent disease. #2 is (much) higher than #1; therefore, people do not have enough vitamin D in their blood to prevent disease.

If we accept we don't have enough, then the next step is to figure out how much we actually need to ingest to reach the necessary blood amounts. Fortunately, we have a study (local) that tested just that:

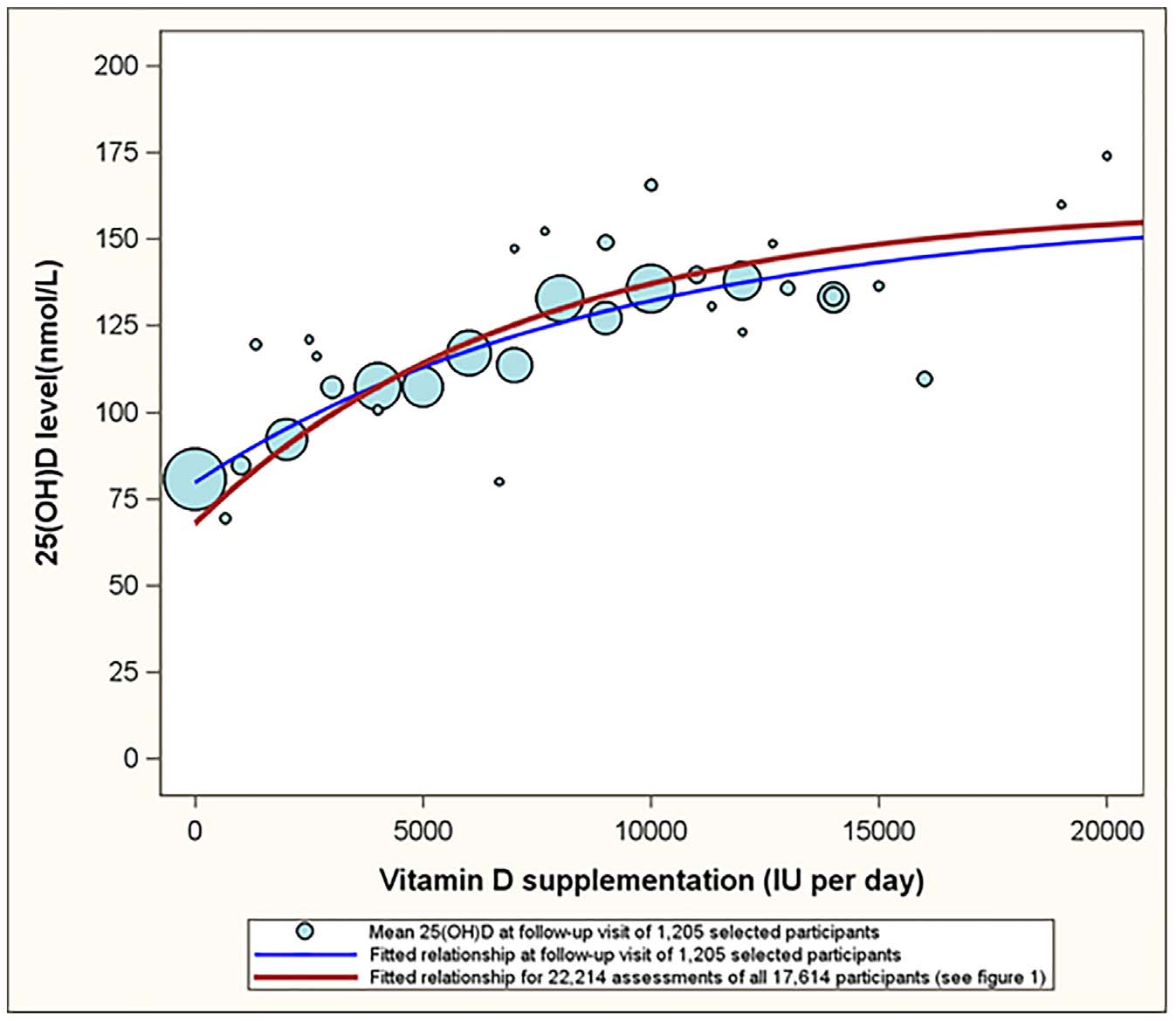

For Figure 2 we restricted our analyses to those subjects that had both their baseline visit and a follow up visit between January 2009 and June 2013 and had reported not to supplement with vitamin D at their baseline visit (1205 subjects, 2410 assessments). This analysis mimics a pre-post comparison of an intervention: a comparison of observations prior to introduction to vitamin D supplementation with observations, on average, 0.98 years after the baseline visit. As such, the blue bubbles in figure 2 represent the expected 25(OH)D level of participants who have been taken oral doses of vitamin D for an average of 0.98 year since baseline.

So they took 1205 people who hadn't been taking a vitamin D supplement before, and checked their blood levels of vitamin D after about a year of supplementation. Simple and beautiful; and it tells us that if you want the optimal level of vitamin D (50 ng/ml or 125 nmol/L in this graph), you need to take at least 8000 IU per day for a year. Going higher than 10000 doesn't appear to make much difference. The full study tested many more people, and there, some weaker results start appearing:

But maybe those weaker results just didn't take it for long enough, since no duration is mentioned here, unlike the earlier graph, where it was specifically one year. Either way, 10000 IU per day reaches optimal levels (50 ng/ml or 125 nmol/L in this graph) in the vast majority of cases even here. There's also this caveat: "These recommendations appeared 2 to 3 times higher for obese participants relative to normal weight subjects, depending on the 25(OH)D target level." So maybe those weaker results were just obese. You need to take your weight into account before starting a supplement regimen; you may easily not be getting the results you expect if you are taking an amount meant for someone with less "baggage". For example, your ingested 6000 might effectively become 2000, a puny dosage. Anyway, let's try to confirm these results with another study (local):

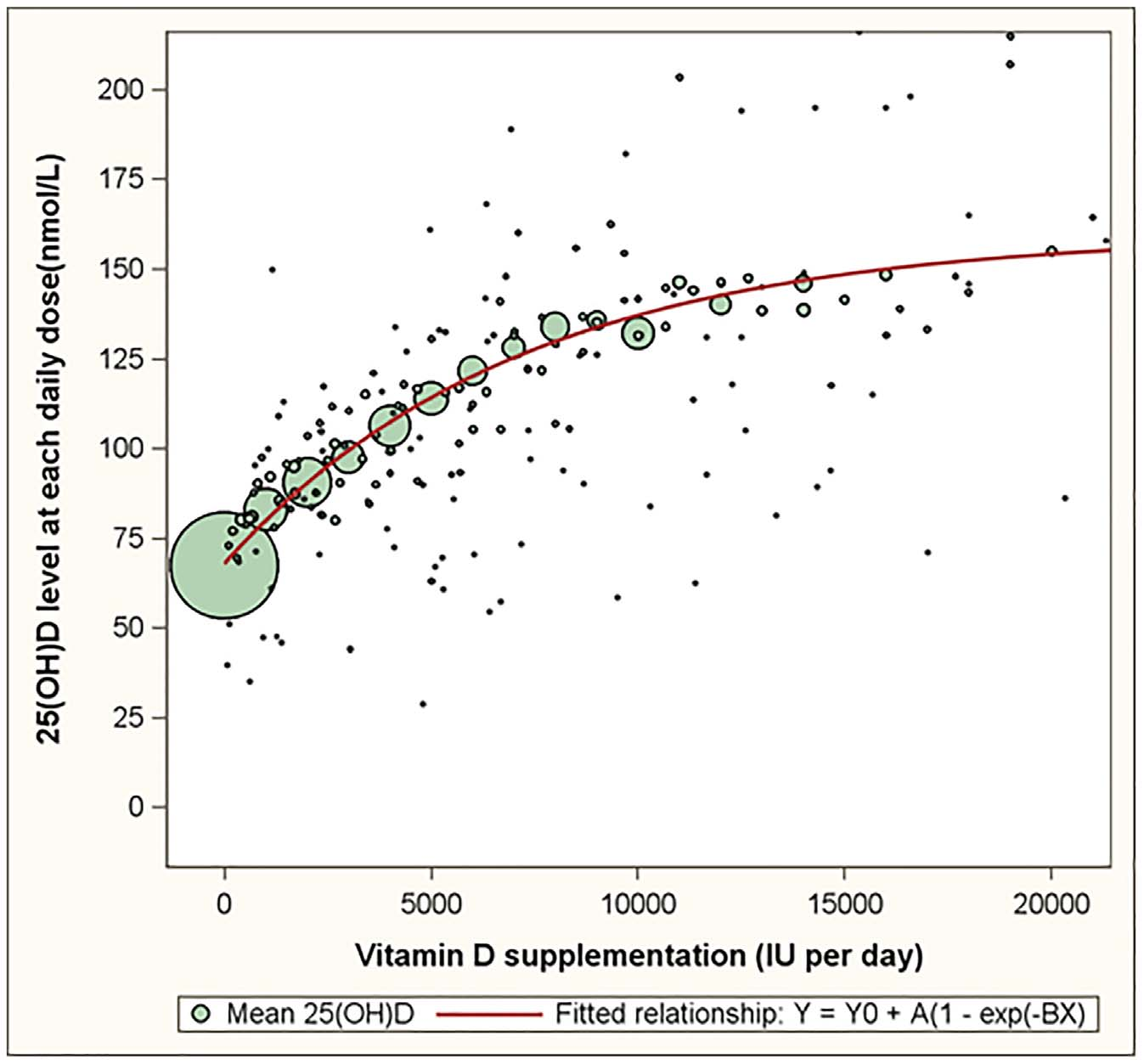

The results seem a little weaker. Keep in mind that this study was different in a few ways, such as:

There were no exclusion criteria, and participants included both genders and a wide range of ages, nationalities and levels of health status.

The previous study recruited only healthy adult volunteers. And so, the slightly weaker results here might simply be explained by a lesser response to vitamin D3 due to poor health (chronic disease, maybe digestive problems, etc.) in some of the participants. However, even in this study, 10000 IU per day achieved great results:

The supplemental dose ensuring that 97.5% of this population achieved a serum 25(OH)D of at least 40 ng/ml was 9,600 IU/d.

12-13000 appears to hit 50 ng/ml (125 nmol/L) more reliably, but note the smaller sample size. Maybe we should consider 10k the right dose for healthy volunteers, and 12-13k for less healthy people. Either way, we do have a pretty tight range, so I consider the two studies to be in agreement. And so, we have just added puzzle piece #3: the amount of vitamin D we need to ingest per day for optimal blood levels.
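As a rough illustration of how the obesity caveat interacts with these doses, here's a hypothetical Python sketch. It takes 10,000 IU/day as the healthy-volunteer figure, uses 12,500 IU/day as an assumed midpoint of the 12-13k range, and simply multiplies by the 2-3x factor quoted from the first study. Individual responses vary, so treat the output as ballpark only.

```python
# Daily doses discussed above, in IU/day. 12,500 is an assumed midpoint of "12-13k".
BASE_DOSE_IU = {"healthy": 10_000, "less healthy": 12_500}

# "2 to 3 times higher for obese participants" (first study), used as a plain multiplier.
OBESITY_FACTOR = (2.0, 3.0)


def dose_range(group: str, obese: bool) -> tuple[float, float]:
    """Hypothetical (low, high) daily dose in IU for a given group."""
    base = BASE_DOSE_IU[group]
    if not obese:
        return (base, base)
    low, high = OBESITY_FACTOR
    return (base * low, base * high)


def effective_dose(ingested_iu: float, factor: float) -> float:
    """Dose an ingested amount 'behaves like' if the response is `factor` times weaker."""
    return ingested_iu / factor


print(dose_range("healthy", obese=False))  # (10000, 10000)
print(dose_range("healthy", obese=True))   # (20000.0, 30000.0)
print(effective_dose(6000, 3.0))           # 2000.0
```

The `effective_dose` call reproduces the "6000 might effectively become 2000" example from the obesity caveat.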

But is that amount safe? Briefly: yes, absolutely zero danger. Quoting from the studies cited above:

Participants reported vitamin D supplementation ranging from 0 to 55,000 IU per day and had serum 25(OH)D levels ranging from 10.1 to 394 nmol/L.

We did not observe any increase in the risk for hypercalcemia with increasing vitamin D supplementation.

Universal intake of up to 40,000 IU vitamin D per day is unlikely to result in vitamin D toxicity.

Although this data set provides no information with respect to serum or urine calcium values in these individuals, at the same time it is clear that there were no clinical evidences of toxicity

No toxicity was observed even at 40000 or 55000 (!) IU per day, while we're taking only 10000 or a little more. We can include safety as our puzzle piece #4. I've also explored the topic of vitamin D safety in much more depth in my Wikipedia report (start reading from the part titled 'The entire "Excess" section is a sham').

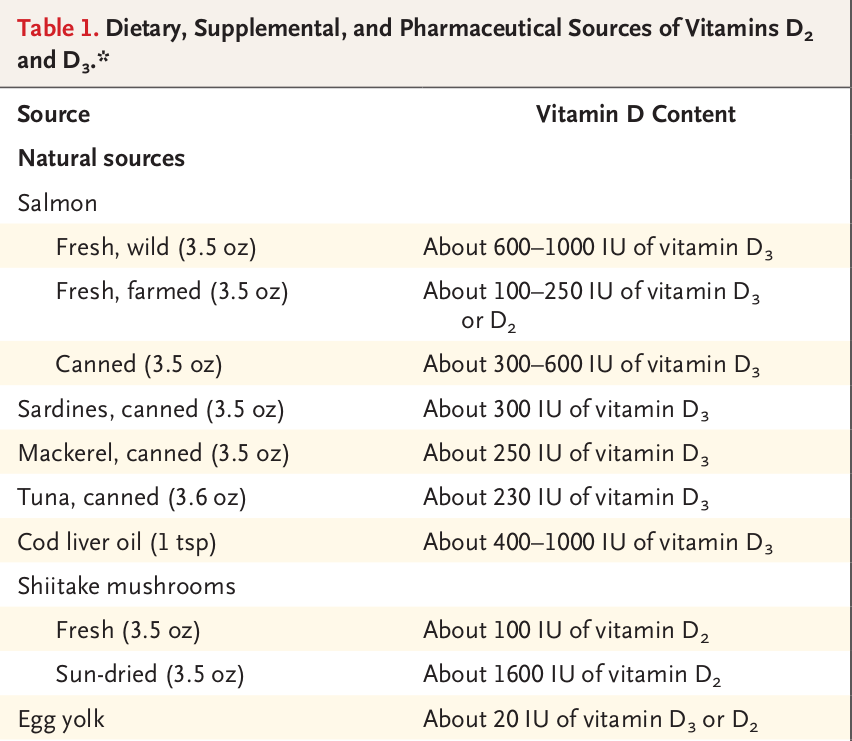

Where do we get that amount from, though? Surely, we've all heard our beloved governments pretend food is a source. TL;DR version: animal products have too little, while the mushroom version is different and doesn't work as well. Look here (local):

Even if you built your entire diet out of the foods listed above, I doubt you'd be able to get the amount needed for therapy (e.g. 10k). In realistic circumstances, I bet you couldn't manage to get even 2000 IU per day. You'd need to shove 600 g of sardines down your throat without getting tired of it... every day! What about cod liver oil (CLO)? One teaspoon gives 700 IU on average, so just take more, right? Well, first of all, that would quickly get expensive: Möller's CLO costs 22 euro (archive) (MozArchive) per 250 ml, and to get 2000 IU of vitamin D from it you'd need to take 25 ml. Not everyone has 66 euro to blow every month, and this is for the bare minimum amount of vitamin D that's useful to take. 5000 IU, for example, would cost you 165 euro per month. And second, even if you were willing to spend that much money, CLO is full of PUFA, and taking that much is very, very dangerous. You'd give yourself more serious disease with that much CLO than with a lack of vitamin D. Therefore, either way, you would just keep degrading health-wise if you relied on animal products as your source of vitamin D, since the 2000 IU per day that you could realistically get from fish can only sustain a low blood level, and CLO is toxic in the amounts required.

However, mushrooms actually have a lot more than it seems. Though the above image lists only 1600 IU in 100 grams of sun-dried mushrooms, which is not that impressive (remember that dried mushrooms weigh less, so that 100 g is equivalent to roughly 700 g of fresh ones), I was just reading this paper (local), which turned the whole issue around for me. The first thing that caught my attention was this:

At midday in mid-summer in Germany, the vitamin D2 content of sliced mushrooms was as high as 17.5 μg/100 g FW after 15 min of sun exposure and reached 32.5 μg/100 g FW after 60 min of sun exposure

So all I need to do is put a few of the widely available button mushrooms outside for an hour, and I get about 1300 IU of additional vitamin D for every 100 g I eat. Still not much, but at least I can use that as a supplement to my supplement, and maybe skip the supplement on the days I'm outside more. The really interesting part came right after the above:

An unpublished Australian study on whole button mushrooms determined the vitamin D2 content after exposure to the midday winter sun in July in Sydney (personal communication, J. Ekman, Applied Horticultural Research, 12 August 2013). Sun exposure to a single layer of small button mushrooms was sufficient to generate 10 μg D2 / 100 g FW after 1 h, while large button mushrooms took 2 h to generate the same amount of vitamin D2.

Remember the vitamin D winter (the part of the year when humans cannot generate vitamin D from the Sun)? Well, mushrooms say "fuck that, we're superior to you pathetic humans" and keep producing regardless. There's still one little problem, namely that the amount is just not satisfying. Assuming you were able to eat 150 g of sun-activated mushrooms per day, at the summer figure of 32.5 μg/100 g quoted above that's still only about 2000 IU of vitamin D, which might keep you from becoming seriously deficient, but isn't enough to sustain a healthy level. However, the mushrooms have one more trick up their caps:

Sun-drying is one method used for drying mushrooms in Asian countries. Analysis of vitamin D2 and ergosterol content of 35 species of dried mushrooms sold in China revealed they contained significant amounts of vitamin D2, with an average of 16.9 μg/g DM (range of 7–25 μg/g DM [48]). No details were provided on the method of drying, nor the time since the initial drying. The moisture content of the commercial dried mushrooms varied, although the majority contained 3–7% moisture.

Wow! An average of 680 IU of vitamin D per GRAM of dried mushrooms is fucking insane. Since they tested 35 species, presumably all mushrooms are capable of this, including the common white button. So the earlier low results were just because the mushrooms weren't left in the sun long enough. If you do leave them out (which is required anyway for them to dry), you get an absolutely insane amount of vitamin D. Just 10 grams of dried mushrooms (equivalent to about 70 grams fresh, or two button mushrooms, since "commercial dried mushrooms [...] have about 15% of the original weight of fresh mushrooms") gives you almost 7K IU of vitamin D per day on average. And since they are dried, they preserve well:

Three types of mushroom (button, shiitake, and oyster) exposed to a UV-B lamp and then hot air-dried, had relatively good retention of vitamin D2 up to eight months when stored in dry, dark conditions at 20 °C in closed plastic containers

Though this quote speaks about hot-air drying, I don't see why that would make a difference. Here's one more source (archive) (MozArchive) with similar results, for good measure:

The third set of mushrooms was dried outdoors in the sunlight with their gills facing upwards for full sun exposure. The most vitamin D was found in shiitake dried with gills up that were exposed to sunlight for two days, six hours per day. The vitamin D levels in these mushrooms soared from 100 IU/100 grams to nearly 46,000 IU/100 grams

Again preserving well:

Most interesting to me is that when we tested our mushrooms nearly a year after exposure, they preserved significant amounts of vitamin D2.
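Here's a small Python sketch that redoes the food-source arithmetic from this part: the cod liver oil costs and the mushroom yields. It assumes 1 μg = 40 IU, a 30-day month, and a CLO density of 80 IU/ml, which is simply what the "25 ml for 2000 IU" figure implies (not a value read from a label). The mushroom inputs are the 32.5 μg/100 g (60 minutes of German midsummer sun) and 16.9 μg/g (average of the Chinese sun-dried samples) values quoted above; the helper names are hypothetical.

```python
IU_PER_UG = 40  # 1 μg of vitamin D = 40 IU

# Cod liver oil: 22 euro per 250 ml bottle (price quoted above).
CLO_EUR_PER_ML = 22 / 250
# Assumed density: "25 ml for 2000 IU" works out to 80 IU per ml.
CLO_IU_PER_ML = 2000 / 25


def clo_monthly_cost(iu_per_day: float, days: int = 30) -> float:
    """Euro spent per month to get `iu_per_day` from cod liver oil alone."""
    ml_per_day = iu_per_day / CLO_IU_PER_ML
    return ml_per_day * days * CLO_EUR_PER_ML


def fresh_mushroom_iu(grams: float, ug_per_100g: float) -> float:
    """IU from sun-exposed fresh mushrooms (vitamin D2 in μg per 100 g fresh weight)."""
    return grams / 100 * ug_per_100g * IU_PER_UG


def dried_mushroom_iu(grams: float, ug_per_g: float) -> float:
    """IU from dried mushrooms (vitamin D2 in μg per g dry matter)."""
    return grams * ug_per_g * IU_PER_UG


def fresh_equivalent(dried_grams: float, remaining_fraction: float = 0.15) -> float:
    """Fresh weight that dries down to `dried_grams`, if ~15% of the weight remains."""
    return dried_grams / remaining_fraction


for iu in (2000, 5000):
    print(f"CLO, {iu} IU/day: about {clo_monthly_cost(iu):.0f} euro per month")
print(f"100 g fresh, 60 min of sun: {fresh_mushroom_iu(100, 32.5):.0f} IU")
print(f"150 g fresh, same exposure: {fresh_mushroom_iu(150, 32.5):.0f} IU")
print(f"10 g dried: {dried_mushroom_iu(10, 16.9):.0f} IU "
      f"(from about {fresh_equivalent(10):.0f} g fresh)")
```

This prints roughly 66 and 165 euro for the oil, 1300 and 1950 IU for the fresh mushrooms, and 6760 IU from 10 g dried (about 67 g fresh), matching the figures in the text.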

Is this the cheap, natural, DIY vitamin D supplement we've all wanted? Well, there's one catch: it's vitamin D2 and not D3, though how much this actually matters is debated (I'll research it soon). UPDATE: I did research it, and found some beneficial effects of eating vitamin D mushrooms (in animal studies, because sadly, I cannot find a single human one that bothered to test relevant dosages):

Bone building (archive) (MozArchive):

Femur BMD [bone mineral density] of the experimental group was significantly elevated compared to initial femur BMD of the study group.

Scaled to weight, the vit D dosage used in this study would be equivalent to about 10000 IU in a human.

Immune system activation (archive) (MozArchive):

Plasma 25-hydroxyvitamin D (25OHD) levels from UVB-exposed mushroom fed rats were significantly elevated and associated with higher natural killer cell activity and reduced plasma inflammatory response to LPS compared to control diet fed rats.

This study used 600 IU, but still somehow had positive effects.

Improvement in metabolic markers (archive) (MozArchive):

A significant decrease in serum triglycerides (from 103 to 75, 69 and 72 mg/dL), total cholesterol (from 267 to 160, 157 and 184 mg/dL), and LDL cholesterol (from 193 mg/dL to 133, 115 and 124 mg/dL) along with an increase in the HDL/LDL ratio, and improved glucose levels were documented.

Prevention of liver damage (local):

that the proportion of severe liver injury (defined as ALT >2000 U/L) was 100% at the first three groups (untreated, vitamin D, and nonenriched mushroom extract), but was dramatically decreased to 0% at the enriched mushroom extract-treated group, demonstrating the synergistic effect of the two different treatments, from the second experiment.

If I understood it right, the mice were fed the mushroom meal 3 times per day, with 1,125 IU of vitamin D per meal, meaning 3375 IU overall. The mushrooms were also shown to work better than synthetic supplements.

So, those are some of the positive effects I've found. However, I cannot in good conscience fail to mention the fact that the mushroom version of vitamin D is absorbed and utilized worse (archive) (MozArchive) in humans:

After standardizing to 100,000 units of drug, increases after cholecalciferol (2.7 ± 0.3 ng/ml) were more than twice as great as those from ergocalciferol (1.1 ± 0.3 ng/ml)

This has been shown over (archive) (MozArchive):

Both produced similar initial rises in serum 25OHD over the first 3 d, but 25OHD continued to rise in the D3-treated subjects, peaking at 14 d, whereas serum 25OHD fell rapidly in the D2-treated subjects and was not different from baseline at 14 d.

And over (archive) (MozArchive):

Subcutaneous fat content of D2 rose by 50 μg/kg in the D2-treated group, and D3 content rose by 104 μg/kg in the D3-treated group. Total calciferol in fat rose by only 33 ng/kg in the D2-treated, whereas it rose by 104 μg/kg in the D3-treated group.

Meaning, the D2 you ingest will increase your blood levels by less, and also get used up faster, than the equivalent amount of D3. There are also indications (archive) (MozArchive) that D2 cannot substitute for all the things vitamin D3 does:

Surprisingly, gene expression associated with type I and type II interferon activity, critical to the innate response to bacterial and viral infections, differed following supplementation with either vitamin D2 or vitamin D3, with only vitamin D3 having a stimulatory effect.

This study is really the one that made me reconsider relying on mushrooms for vitamin D intake. We can more or less deal with the smaller increases or faster disappearance of vitamin D by just taking more of it every day. But if it doesn't actually fulfill all the needed jobs, that's a tougher obstacle to clear. And for me, immune support was the main reason for recommending vitamin D in the first place. Unfortunately, it appears that mushroom D2 doesn't do as well on that front as D3, the form our skin makes from sunlight.
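To put a number on "just taking more of it", here's a rough Python sketch based on the trial quoted above (2.7 vs 1.1 ng/ml rise per 100,000 units). It assumes the rise scales linearly with dose and ignores D2's faster drop-off, so if anything it likely understates the gap; and it says nothing about the interferon findings, which a bigger D2 dose may not fix at all.

```python
# Mean rise in serum 25(OH)D per 100,000 units, from the quoted trial (ng/ml).
RISE_D3 = 2.7
RISE_D2 = 1.1


def d2_equivalent(d3_dose_iu: float) -> float:
    """D2 dose with the same short-term rise as `d3_dose_iu`, assuming linear scaling."""
    return d3_dose_iu * RISE_D3 / RISE_D2


print(f"D3 is about {RISE_D3 / RISE_D2:.2f}x as potent per unit")
print(f"10,000 IU of D3 ~ {d2_equivalent(10_000):,.0f} IU of D2")
```

Under these assumptions, matching a 10,000 IU D3 dose would take roughly 24,500 IU of D2.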

I really wanted the mushrooms to be viable, but they might not be that great after all. They do contain a lot more vitamin D than animal foods, in addition to being widely available, DIY-friendly and easy to preserve. But what good is that if they cannot perform all the needed jobs? And that limitation would make sense, since D3 is the form we naturally make when exposed to the Sun's rays, and we have adapted to it through millions of years of wild living. Very little has been as constant during the development of life on Earth as the Sun, and we should expect our biology to be heavily reliant on it. Now, it's still theoretically possible that the mushroom version will eventually prove to be an adequate replacement for the Sun one, but it's going to take some really convincing research to show that, research that doesn't exist at the moment. Though it has been shown to fulfill some of the relevant jobs, we can't be sure it can do all of them, and there are already indications that it can't.

To be quite honest, almost all the vitamin D2 research currently being done uses the synthetic version, and the liver study I mentioned already showed that the source can make a difference. And though this is a mistake that should be remedied, we can't assume fixing it is going to resolve all the problems. That would be shooting in the dark and going against millions of years of evolution. In the end, though supplementing with mushroom powder should be safe, I can't in good conscience recommend it as a total replacement for the Sun. Maybe it's time to realize that the problem is our unnatural living conditions, and that the solution is simply going outside.

If you want to experiment with this anyway, and use white button mushrooms for it, then you need to cut them up first, since it's the brown insides (the gills) that generate vitamin D, and by default the cap almost fully covers them. After preparing the mushrooms, I recommend grinding them with an electric herb grinder; this will greatly reduce the space they take up. You can then just take a teaspoon of the powder every day you need additional vitamin D. If you do not leave the house enough, and don't have access to supplements or don't want to take them, this is still going to be much better than complete vitamin D starvation (a reality for many people these days).

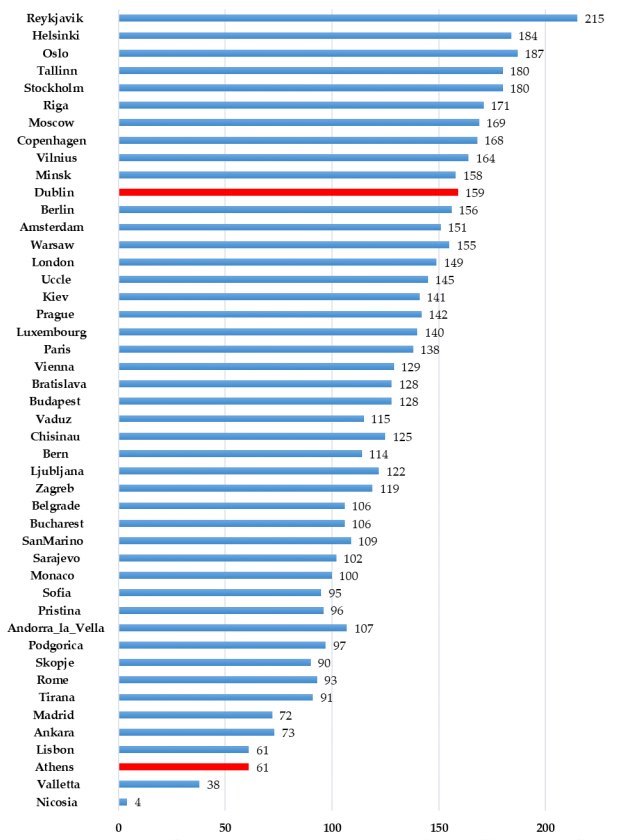

Fortunately, there is another source, the Sun, which is where vitamin D3 originally comes from anyway. Getting enough from it is trickier than it seems, though. For a start, there is the vitamin D winter (archive) (MozArchive), the part of the year during which sunlight is too weak for the skin to make any vitamin D:

And so, if you happen to be deficient during the vitamin D winter (half a year or more in some regions), the only option left is supplements. But what if it's summer? Well, you still need to actually get outside, and today's office-bound or homebound people might have trouble doing that every day during the right hours (usually 10:00 to 15:00). Even if you do, you need the proper clothing to generate enough vitamin D:

Though you can theoretically generate vitamin D in autumn or spring, people already cover themselves up too much during those seasons. So, if you want to rely on the Sun to become vitamin D replete, the short summer (in most of Europe or Asia, e.g.) is when you need to store enough to survive the vitamin D winter, during which your levels will drop every day. This is doable in principle, but not with modern lifestyles (try telling a hikikomori he has to be naked in the Sun for an hour every day). And again, if you're reading this during another season, you can't afford to wait, since a lack of vitamin D makes you susceptible to pretty much every health problem that exists.

It would be great if we could rely on the Sun as our only vitamin D source (hey, it's free, and cannot be affected by false advertising or laced with who knows what additives), and it's probably healthier for various reasons, but the vast majority of us will not be able to manage it in this day and age. Hell, if you look at the disease incidence chart, even "Outdoor workers in late summer" and "Tribal East Africans" do not reach 50 ng/ml. Do you think you can, with the Sun alone? However, with my newfound appreciation of mushrooms as a vitamin D source, the "weaknesses" of the Sun-only approach are much more manageable. On the days you don't go outside enough (and during the vitamin D winter), just eat more of the dried mushrooms you've stored. On the days you do, you can take less or skip them altogether. This mix should make it viable to replace supplements, especially since the Sun generates vitamin D3, which should defuse the worries about mushroom D2 being inadequate.

I do not like using supplements, and wouldn't even consider them for any other nutrient, as all the others are relatively easy to get from a well-prepared diet. With this one, it's pretty much impossible to get enough from food, regardless of how hard you try. That leaves the Sun, except modern lifestyles also make that option pretty much impossible; and even if they didn't, the Sun just doesn't shine strongly enough for half the year in many countries. As you can see, this situation is quite special.

You have to be careful with supplements, though, because the companies are not your friends and are in it just for profit. So you can expect them to cut corners during production (archive) (MozArchive), resulting in less, more, or none of the advertised ingredient(s), or even the addition of other ones they think will give you the effects you're looking for:

Twenty-three of 57 products (40%) did not contain a detectable amount of the labeled ingredient. Of the products that contained detectable amounts of the listed ingredient, the actual quantity ranged from 0.02% to 334% of the labeled quantity

Seven of 57 products (12%) were found to contain at least 1 FDA-prohibited ingredient (Table). Five different FDA-prohibited compounds were found, including 4 synthetic stimulants (1,4-dimethylamylamine, deterenol, octodrine, and oxilofrine) and omberacetam.

But that study was about sports supplements; what about vitamin D? Well, do you think the health industry is somehow immune to this issue? Then you're in for a surprise (archive) (local):

One of the supplements (vitamin and mineral Formula F; Table 1) was purported to contain 1600 IU (40 mcg) vitamin D, 99% as D3. Analysis by UV spectrophotometry and HPLC revealed that each capsule contained a significantly higher amount of 186,400 IU (4,660 mcg) vitamin D3

In addition to this manufacturing error, there was an error in labeling recommending 10 capsules instead of one capsule per day. Thus, the patient consumed 1,864,000 IU (46,600 mcg) of vitamin D3 daily for 2 months, more than 1,000 times what the manufacturer had led the patient to believe he was ingesting.

Heh. By the way, he didn't die. No kidney failure or any of those other monsters the mainstream keeps scaring us with, either. But that isn't the point. The point is, you can easily fall into a trap in this unregulated market. So just be careful, is all I'm saying. Try buying from a reputable company, if one even exists. This site seems good for recommendations. But remember that nothing is totally without risk. And the risk of being hurt by vitamin D starvation is surely incomparably higher than what happened to this guy (still, no long-term damage with these cosmic dosages!). By the way, this issue seems to be mostly USA-specific; a while back, an independent testing company checked many of the vitamin D supplements available in Poland, and they were all correctly labeled, without random unlisted crap thrown in. But again, the only way to be really sure that you won't be harmed is to rely on the Sun.
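For the record, the numbers in that case report add up. This Python snippet redoes the arithmetic, assuming the standard 1 μg = 40 IU conversion and comparing against the 1,600 IU labeled for a single capsule.

```python
IU_PER_UG = 40  # 1 μg of vitamin D3 = 40 IU

labeled_iu_per_capsule = 1_600
measured_ug_per_capsule = 4_660
capsules_per_day = 10  # the erroneous label instruction

measured_iu_per_capsule = measured_ug_per_capsule * IU_PER_UG
daily_iu = measured_iu_per_capsule * capsules_per_day

print(f"{measured_iu_per_capsule:,} IU per capsule")  # 186,400 IU per capsule
print(f"{daily_iu:,} IU per day")                    # 1,864,000 IU per day
print(f"{daily_iu // labeled_iu_per_capsule:,}x the labeled single-capsule dose")  # 1,165x
```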

If you decide to use a supplement, drops are more effective than tablets and capsules (archive) (MozArchive) in raising blood vitamin D levels:

Changes in 25(OH)D per mcg D3 administered, based on the results of third-party analysis, were TAB = 0.068 ± 0.016 ng/mL 25(OH)D/mcg D3; DROP = 0.125 ± 0.015 ng/mL 25(OH)D/mcg D3; and CAP = 0.106 ± 0.017 ng/mL 25(OH)D/mcg D3
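To see what those per-mcg figures mean in practice, here's a Python sketch for a hypothetical 100 mcg (4,000 IU) dose. It assumes the response scales linearly with dose and uses only the mean values from the quote, ignoring the ± spreads.

```python
# Mean rise in serum 25(OH)D per mcg of D3, by dosage form (ng/ml per mcg).
RESPONSE_PER_MCG = {"tablet": 0.068, "capsule": 0.106, "drop": 0.125}


def expected_rise(form: str, dose_mcg: float) -> float:
    """Expected 25(OH)D rise in ng/ml, assuming the response is linear in dose."""
    return RESPONSE_PER_MCG[form] * dose_mcg


dose = 100  # hypothetical dose: 100 mcg = 4,000 IU
for form in ("tablet", "capsule", "drop"):
    print(f"{form}: +{expected_rise(form, dose):.1f} ng/ml")

ratio = RESPONSE_PER_MCG["drop"] / RESPONSE_PER_MCG["tablet"]
print(f"drops vs tablets: {ratio:.2f}x")
```

On these means, the same dose as drops raises blood levels about 1.8 times as much as tablets (12.5 vs 6.8 ng/ml), with capsules in between.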

Vitamin C is another nutrient in which it is very easy to become deficient. According to this UK nutrition database, all meats, eggs, milk products, nuts, seeds, grains (and their derivatives, like breads), herbs, spices, and oils contain a big fat zero of it. So you're left with fruit and vegetables. If you think you're covered because you eat your "five a day" (or whatever), I'll sadly have to douse that fire. I used to think that too, but when I finally decided to check it out, I was shocked to discover just how few items are significant sources of vitamin C. Many commonly eaten fruit and vegetables (such as beets, carrots, celery, cucumbers, lettuce, onions, apricots, grapes, pears, plums, watermelon...) actually contain very little:

| Fruit | Vitamin C (mg per 100g) | Vegetable | Vitamin C (mg per 100g) |
|---|---|---|---|
| Apple (unpeeled) | 20 | New potato | 16 |
| Apple (peeled) | 14 | Old potato | 11 |
| Apricot | 6 | Asparagus | 12 |
| Avocado | 6 | Aubergine | 4 |
| Banana | 11 | Beetroot | 4 |
| Blackcurrant | 200 | Broccoli | 87 |
| Cherry | 11 | Brussels sprout | 115 |
| Clementine | 54 | Cabbage (white) | 35 |
| Currant | 0 | Carrot | 35 |
| Damson | 5 | Cauliflower | 43 |
| Date | 14 | Celery | 8 |
| Gooseberry | 14 | Chicory | 5 |
| Grapefruit | 36 | Courgette | 21 |
| Grape | 3 | Cucumber | 2 |
| Guava | 230 | Curly kale | 110 |
| Kiwi | 59 | Fennel | 5 |
| Lemon | 58 | Garlic | 7 |
| Fig | 1 | Gourd | 185 |
| Lychee | 8 | Leeks | 17 |
| Mango | 37 | Lettuce | 5 |
| Melon (cantaloupe) | 26 | Marrow | 11 |
| Melon (galia) | 15 | Mustard and cress | 33 |
| Melon (honeydew) | 9 | Okra | 21 |
| Watermelon | 8 | Onion | 5 |
| Nectarine | 37 | Parsnip | 17 |
| Orange | 54 | Pepper (chili) | 120-140 |
| Passionfruit | 23 | Plantain | 9 |
| Pawpaw | 60 | Pumpkin | 14 |
| Peach | 31 | Radish | 17 |
| Pear | 6 | Spinach | 26 |
| Pineapple | 12 | Spring green | 180 |
| Plum | 4 | Swede | 31 |
| Raspberry | 32 | Sweet potato | 23 |
| Rhubarb | 6 | Tomato | 17 |
| Satsuma | 47 | Turnip | 17 |
| Sultana | 0 | Watercress | 62 |
| Tangerine | 30 | Yam | 4 |

Data for the table lifted from the above UK nutrition database. Only raw items were included, as adding up the ones cooked / processed in various ways, or mixed with other stuff, would make the table way too big and confusing. I think it's enough to realize that vitamin C is extremely heat-sensitive (local) (The most dramatic AA losses occurred during the cooking stage (mean loss 58%, standard deviation (SD) 19.5%, range 33 - 81%), with broccoli showing the greatest mean loss of 81% (SD 2.9%)

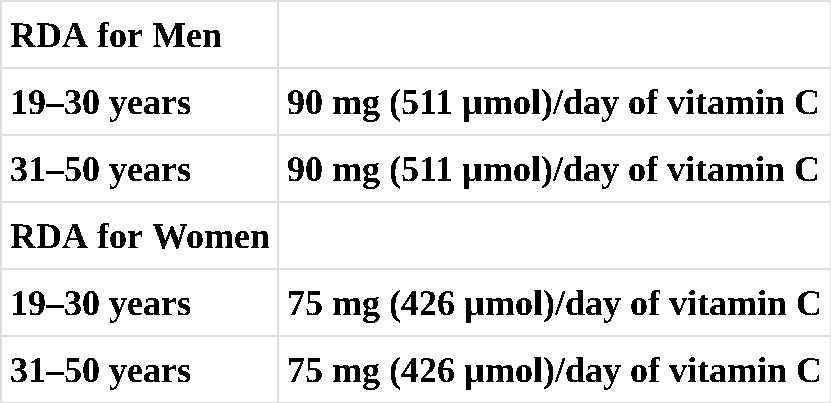

) so just cut half from the raw amounts to get the rough cooked ones. Storage time is another factor reducing the vit C levels; two weeks of keeping chili peppers at 5 degrees Celsius temperature (similar to what a fridge has) kills over half of it (archive) (MozArchive). Realizing this, we should already suspect people might have a problem reaching an optimal level. But first, let's see what the Institute of Medicine actually considers (archive) (MozArchive) adequate:

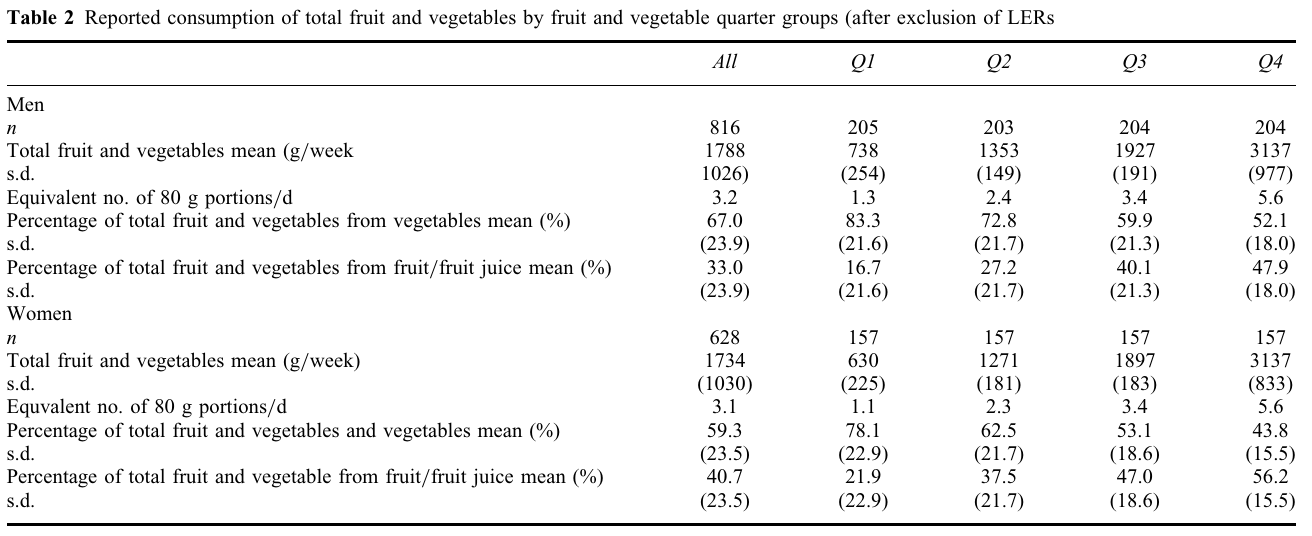

Wow! That should be easy... it takes just about 300g of tangerines or a little under 200g of oranges (so three or four tangerines, or one orange, should suffice). Still, even that's probably beyond most people eating a "regular diet". Hey, let's analyze the food consumption of the Brits (archive) (local) (just to be consistent with the food database) and see if my assumption actually checks out:

The lowest quarter of fruit and vegetable eaters ingests only about 100g of these foods per day. So, unless that intake came entirely from the items in the highest tiers of vit C content, it wouldn't be enough to reach even the compromised Institute of Medicine's recommendations. But of course, their entire intake does not consist of only blackcurrants and chili peppers, so they are surely missing the target by a lot. Yet even the group that ate the most fruit and vegetables in this study only managed to muster a little over 400g per day. They would end up hitting the official vit C recommendations if their intake consisted of only items with 30mg+ of vit C per 100g. But of course, knowing how actual people mix different foods (some of the space will be taken by stuff like cucumbers, beets, etc. that have barely any vit C), they will also miss the target or just barely reach it. Anyway, the researchers also measured the actual vitamin C intakes, so let's see if my analysis actually has anything to do with reality:

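| | Men, Q1 (lowest fruit & veg intake) | Men, Q4 (highest) | Women, Q1 (lowest) | Women, Q4 (highest) |
|---|---|---|---|---|
| Vitamin C intake per day | 42mg | 107mg | 36mg | 106mg |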
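Here is the arithmetic behind the last few paragraphs as a small sketch. It calculates how many grams of a food it takes to hit a vitamin C target, using the raw values from the table. It also applies an optional "cut it in half" adjustment for cooking, as suggested above, and checks the quartile intakes against the official numbers. The IoM figure in the image isn't reproduced here, so the sketch assumes the IoM's adult RDAs of 90 mg/day for men and 75 mg/day for women.

```python
# Vitamin C in raw foods, mg per 100 g (values from the table above).
VIT_C_MG_PER_100G = {"tangerine": 30, "orange": 54, "blackcurrant": 200, "cucumber": 2}

# Assumed IoM adult RDAs, mg/day.
RDA_MG = {"men": 90, "women": 75}

# Average daily vitamin C intakes by fruit & vegetable quartile (table above).
INTAKE_MG = {("men", "Q1"): 42, ("men", "Q4"): 107,
             ("women", "Q1"): 36, ("women", "Q4"): 106}


def grams_needed(target_mg: float, food: str, cooked: bool = False) -> float:
    """Grams of a food needed to supply target_mg of vitamin C.

    cooked=True applies the rough rule of thumb from the text:
    cooking destroys about half of the vitamin C.
    """
    mg_per_100g = VIT_C_MG_PER_100G[food] * (0.5 if cooked else 1.0)
    return round(100 * target_mg / mg_per_100g, 1)


def meets_rda(sex: str, quartile: str) -> bool:
    """True if that group's average intake reaches the assumed RDA."""
    return INTAKE_MG[(sex, quartile)] >= RDA_MG[sex]


print(grams_needed(90, "tangerine"))            # 300.0
print(grams_needed(90, "orange"))               # 166.7
print(grams_needed(90, "orange", cooked=True))  # 333.3
print(grams_needed(90, "blackcurrant"))         # 45.0
print(grams_needed(90, "cucumber"))             # 4500.0
for sex, quartile in INTAKE_MG:
    print(sex, quartile, meets_rda(sex, quartile))
# men Q1 False, men Q4 True, women Q1 False, women Q4 True
```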

Yeah, that's about what I expected. What really surprises me is that Great Britain, the country with widespread "5 A Day" propaganda, eats so little fruit and vegetables despite it. But the resulting vit C intakes were easily guessable, considering how little vitamin C commonly eaten plants contain and people's propensity for eating them cooked rather than raw. So anyway, only the quarter eating the most fruit and vegetables managed to jump over the very low bar of the official recommendations. The others fail to clear even that minor hurdle. Meaning that 75% of Brits are vitamin C deficient even according to the compromised Institute of Medicine's recommendations. I think you can guess where I'll be going with this now. Of course, it's time to analyze the basis for the official recommendations in the first place. Quoting from the Institute of Medicine's report cited at the beginning:

Based on vitamin C intakes sufficient to maintain near-maximal neutrophil concentrations with minimal urinary loss, the data of Levine et al. (1996a) support an EAR of 75 mg/day of vitamin C for men.

I'm starting to see a pattern forming here. Once again only one function of a nutrient is considered (just like with iodine and vitamin D). But this time it's not even full function, only a "near-maximal" (80%) one. They had a problem with "60 percent of maximal ascorbate concentration in neutrophils" "providing less antioxidant protection than 80 or 100 percent". But somehow 100% was too much, so they arbitrarily decided on a "midpoint". And as usual, the tricksters have not bothered to justify focusing only on "neutrophil concentrations". In fact, they spend a lot of time citing evidence of much higher vitamin C intakes having positive effects, such as:

Singh et al. (1995) found that the risk of coronary artery disease was approximately two times less among the top compared to the bottom quintile of plasma vitamin C concentrations in Indian subjects (the top quintile here had almost 3 times higher blood vitamin C than the lowest one)

Follow-up Study cohort of more than 11,000 adults showed a reduction in cardiovascular disease of 45 percent in men and 25 percent in women whose vitamin C intakes were approximately 300 mg/day from food and supplements

In a case-control comparison of 77 subjects with cataract and 35 control subjects with clear lenses, vitamin C intakes of greater than 490 mg/day were associated with a 75 percent decreased risk of cataracts compared with intakes of less than 125 mg/day

Similarly, vitamin C intakes greater than 300 mg/day were associated with a 70 percent reduced risk of cataracts

A series of small, clinical experiments reported that vitamin C supplementation of 2 g/day may be protective against airway responsiveness to viral infections, allergens, and irritants

Then they trash it all and say "you need only 75mg, plebeian, because neutrophils are all that matters and we need you inside the medical system, so don't you dare ingest any more". And that's what finds its way into the official recommendations. Barely anyone will read the actual justification and find out it's baseless. But why do they "officially" do that? Let's see them try to explain themselves:

Although many of the above studies suggest a protective effect of vitamin C against cataracts, the data are not consistent or specific enough to estimate the vitamin C requirement based on cataracts

Although many of the above studies suggest a protective effect of vitamin C against asthma and obstructive pulmonary disease, the data are not consistent or specific enough to estimate the vitamin C requirement based on asthma or pulmonary disease

Supposedly, the evidence just isn't "consistent or specific enough". But when would it be? When you could find a single intake which absolutely everyone was proven to benefit from in the same way? And if you found a single person who doesn't, well then suddenly this amount must be completely useless? This simply isn't how biology works, though. Requirements hugely differ between people and even in the same person at different times. One of the factors that massively increases vitamin C needs is current infectious disease (archive) (MozArchive):

The initial mean +/- SEM baseline plasma ascorbic acid concentration was depressed (0.11 +/- 0.03 mg/dl) and unresponsive following 2 days on 300 mg/day supplementation (0.14 +/- 0.03; P = 1.0) and only approached low normal plasma levels following 2 days on 1000 mg/day (0.32 +/- 0.08; P = 0.36) [...] We confirmed extremely low plasma levels of ascorbic acid following trauma and infection

So, the disease was rapidly wasting their vitamin C stores, and 1g per day was required just to approach low-normal levels. Imagine what would have happened to these people if they followed the IoM's recommendations (the reaper can be heard sharpening her scythe somewhere close...).

In a set of 100 random people, the few particularly healthy ones might do relatively okay with less than 1g vit C intake (though I don't believe this is optimal for anyone). Most might fall in the 1g-2g range, and some will need multiple grams. Yet the IoM has targeted its recommendations at only the least needy. If they were designing staircases, they would build them only for people who take two steps at a time, leaving a hole where every second stair would usually be. And the wheelchair-bound? They would be left completely out in the cold. This is the exact opposite of how nutrient requirements should be decided; all possible functions should be covered by the recommended amount. If there is even a tiny clue somewhere that more might still do something, then it should be assumed that it does. Then, double it just to be safe. After all, if you give too much, all that happens is that the excess is excreted. That holds at least for vit C, and most other nutrients follow the same rule; you need to ingest many times more than you need before you even begin to risk hurting yourself. On the other hand, if you give too little, you get a disease and might even die. Of course, no one can be so stupid as to think that doing it the way the IoM does is sane, especially not such a big organization. Hey, they found all the evidence of vit C benefits for me; they just decided to ignore it later. They know very well what they are doing, and your health is simply not their goal! They basically admit it directly here:

Kallner et al. (1979) previously reported that the body pool of vitamin C was saturated at an intake of 100 mg/day in healthy non-smoking men; thus, an average intake at the EAR of 75 mg/day would not provide body pool saturation of vitamin C.

So, 100mg "saturates the body pool", but they will only give you 75. If that isn't an admission of malice, then what is? Even according to the Institute's own cited evidence, 2g per day may protect against airway responsiveness to infections, allergens and irritants. So why not set the base requirement at least that high? If some need less than this and end up receiving a little more, then so be it; the point is to give enough so that everyone's needs are satisfied. After all, it's better to over-help someone than under-help. Anyway, this base would be corroborated by the fact that other animals which (like us) can't make their own vitamin C ingest comparable (archive) (MozArchive), or even higher, amounts in their diets:

[...] dietary intake of vitamin C reported for gorillas (Gorilla gorilla) is 20–30 mg/kg/day, for spider monkeys (Ateles geoffroyi) is 106 mg/kg/day, and for howler monkeys (Alouatta palliata) is 88 mg/kg/day; similar considerations are valid for bats, with Artibeus jamaicensis consuming 258 mg/kg/day

That "20–30 mg/kg/day" figure turns into 1400-2100mg for a 70kg person. I think this suggests that our bodies expect at least this much regularly. And this intake is from the animal ingesting the least vitamin C from the list; following the others would require roughly 6-18g per day. This doesn't mean that we necessarily need that much, but it surely means that this amount is not toxic at all and that we should aim higher rather than lower. The animals that make vitamin C also make gram doses (archive) (MozArchive) of it ("The liver of goats has been estimated to produce between 2-4 g of vitamin C per day"), further corroborating this kind of intake as the minimal amount for humans.
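The per-kilogram scaling used above is simple multiplication. This sketch assumes plain linear scaling by body weight and a 70 kg person, as in the text. It applies no allometric correction, even though real cross-species comparisons often use one.

```python
# Reported dietary vitamin C intakes, mg per kg of body weight per day.
INTAKE_MG_PER_KG = {
    "gorilla (low estimate)": 20,
    "gorilla (high estimate)": 30,
    "howler monkey": 88,
    "spider monkey": 106,
    "Artibeus jamaicensis (bat)": 258,
}


def human_equivalent_g(mg_per_kg: float, body_kg: float = 70) -> float:
    """Grams per day for a person of body_kg, assuming linear weight scaling."""
    return mg_per_kg * body_kg / 1000


for species, mg_per_kg in INTAKE_MG_PER_KG.items():
    print(f"{species}: {human_equivalent_g(mg_per_kg):.2f} g/day")
# 1.40, 2.10, 6.16, 7.42 and 18.06 g/day respectively
```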

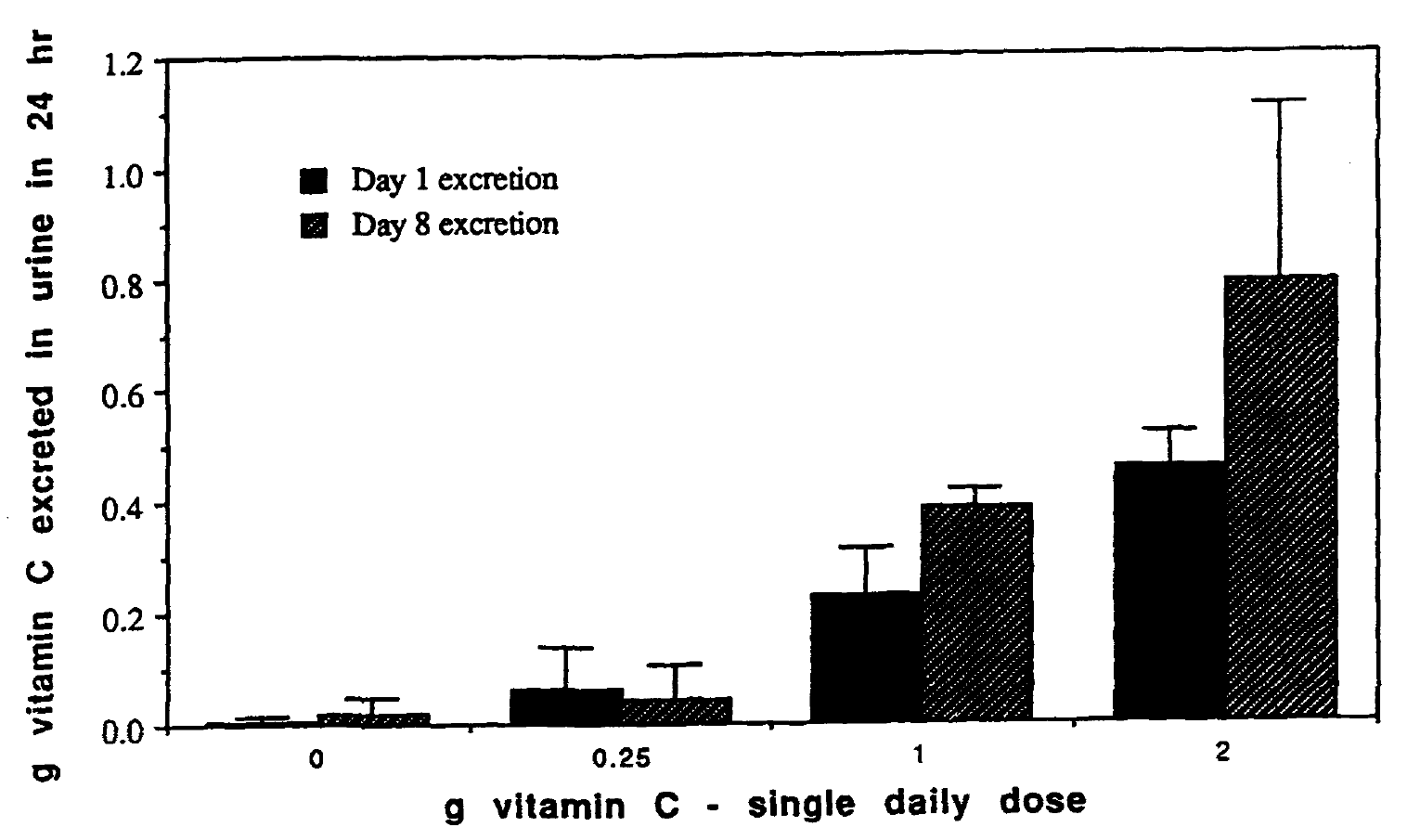

Urine excretion studies (archive) (MozArchive) (local) provide another piece of evidence that we need gram doses of vitamin C:

If you take 1g for 8 days, 400mg of that (40%) will be urinated away. But if you add another gram, 800mg will be excreted, which is still only 40%! Don't you think that, if the body didn't want any more, it would urinate away the entire excess, increasing the percentage? But no, the relative loss remains the same at a 2g intake as at 1g. Therefore the daily need is at least that much, but probably more. To find the actual requirements, the dose should probably be increased until the entire addition is excreted, but they did not test more than 2g in this study. In 1981, Robert Cathcart, one of the doctors using high-dose vit C, came up (local) with a way to find your own optimal intake called the "bowel tolerance method", in which you take a little less than what's required to cause diarrhea:

The maximum relief of symptoms which can be expected with oral doses of ascorbic acid is obtained at a point just short of the amount which produces diarrhea, The amount and the timing of the doses are usually sensed by the patient. The physician should not try to regulate exactly the amount and timing of these doses because the optimally effective dose will often change from dose to dose. Patients are instructed on the general principles of determining doses and given estimates of the reasonable starting amounts and timing of these doses. I have named this process of the patient determining the optimum dose, TITRATING TO BOWEL TOLERANCE. The patient tries to TITRATE between that amount which begins to make him feel better and that amount which almost but not quite causes diarrhea.

...which - he discovered - will almost always be many grams (500mg was the lowest effective amount he found). Keep in mind he usually treated very sick people, so you will not necessarily need that much. But how to reach gram doses if you do in fact find out that you require them? Looking back at the British food consumption data, it is clear that a "normal" diet will bring us nowhere close to those amounts. In fact, the situation of regular people is really close to the vitamin D starvation pandemic I've already covered. But if you think they have it hard, consider how carnivore dieters are simply begging for the reaper to cut them down, since their only even mildly significant vitamin C source is liver at 19mg/100g, and no one will eat 500g of that every day (this also might result in a retinol overdose in a few months or less). And they can't rely on that 100mg amount to carry them through their entire life either way, since they will get injured or infected or somehow compromised at some point, and then that lowly amount will bring their demise.

Now imagine trying to get 20 times more than that for an amount that's actually protective against disease. Even eating 2kg of oranges (one of the highest vit C-containing fruit that's commonly eaten) would only provide about 1g. Juicing them would make it a little easier to ingest that much... with "only" about 1.5l of juice to drink. But that's annoying and obviously unsustainable. Yet I still do not like relying on supplements if it's not absolutely necessary; you have to trust a company to have put in there what it claims to, and deal with the additives, etc. Many (MozArchive) (archive) people (MozArchive) (archive) report (MozArchive) bad reactions (MozArchive) to synthetic vitamin C supplements. There is also evidence (archive) (MozArchive) that vitamin C from natural sources is utilized more effectively than synthetic: "In contrast, the urinary excretion of ascorbic acid at 1, 2 and 5 h after ingestion of acerola juice were significantly less than that of ascorbic acid".

My favorite solution is acerola cherry powder (archive) (MozArchive) which contains 500mg vit C in one tiny spoon (some might contain even more; I don't care about the brand but it was the first one I found that listed the amounts). But if you lack access to that or don't want it, the chemical version should, once again, be better than the almost-complete vitamin C starvation that the "regular" diet provides. And you should still be drinking juice of various fruit and vegetables since there's still a significant amount of vit C (plus other useful things, and it tastes nice) in there.
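This sketch covers two bits of arithmetic from this part. First, it works through the excretion percentages from the urine study, including how much of the second gram specifically was lost. Second, it works out how much of each source it takes to reach 2 g per day. The per-unit values come from the text: 54 mg per 100 g of orange, 19 mg per 100 g of liver, and about 500 mg per spoon of the acerola powder mentioned. The function names are just illustrative.

```python
import math


def excreted_fraction(dose_mg: float, excreted_mg: float) -> float:
    """Share of an oral vitamin C dose that ended up in the urine."""
    return excreted_mg / dose_mg


# Urine study figures from the text: 1 g -> 400 mg excreted, 2 g -> 800 mg.
print(excreted_fraction(1000, 400))               # 0.4
print(excreted_fraction(2000, 800))               # 0.4
# Of the *extra* gram, the extra 400 mg excreted is still only 40%.
print(excreted_fraction(2000 - 1000, 800 - 400))  # 0.4

# Vitamin C per unit: (unit, mg per unit).
SOURCES = {
    "orange": ("100 g", 54),
    "liver": ("100 g", 19),
    "acerola powder": ("spoon", 500),
}


def units_for_target(target_mg: float, mg_per_unit: float) -> int:
    """Whole units needed to reach target_mg (rounded up)."""
    return math.ceil(target_mg / mg_per_unit)


for name, (unit, mg) in SOURCES.items():
    print(name, units_for_target(2000, mg), "x", unit)
# orange 38 x 100 g, liver 106 x 100 g, acerola powder 4 x spoon
```

So 2 g a day means close to 4 kg of raw oranges or over 10 kg of liver, versus four spoons of the powder.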

In summary, several pieces of evidence support ingesting gram doses of vitamin C:

Paleolithic humans are likely to have averaged about 600mg vitamin C--within the spectrum of contemporary estimates, but toward the higher end; this isn't as much as I think is optimal, but is still 7-8 times more than the official requirement. Since our diets are worse in other aspects, and considering our higher stress levels, overworking, less sunshine, worse social connections and more electromagnetic radiation - we will need proportionately more.

Whichever way you slice it, we need much more than the ~100mg the Institute of ~~Murderers~~ Medicine has set as the requirement. In fact, according to them, 2g is the "Tolerable Upper Intake Level", while in reality it's the bare minimum for restoring good health.

We are all likely deficient in selenium. Anti-cancer effects from eating 200 mcg per day have been found (local):

Data from the Nutritional Prevention of Cancer randomized trial have shown a significant protective effect of supplementation with 200 μg Se/d, as high-Se yeast, on cancer incidence and mortality with the most notable effect being on prostate cancer, with lesser effects on colorectal and lung cancers.

Another study (archive) (MozArchive) showed beneficial effects for diabetes:

A 200 μg/day selenium supplementation among patients with T2DM and CHD resulted in a significant decrease in insulin, HOMA-IR, HOMA-B, serum hs-CRP, and a significant increase in QUICKI score and TAC concentrations.

And yet, the average is a lot lower (archive) (MozArchive):

The level of selenium intake in Poland ranges from 30 to 40 µg/day [70]. In Spain, the intake of selenium is 44–50 µg/day, in Austria it is 48 μg/day, while in Great Britain it is 34 µg/day [30,78]

The best food source is Brazil nuts, with 50 - 300mcg per nut (archive) (MozArchive), meaning one per day should provide enough on average.
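Here is a quick check of the "one nut per day" claim. The sketch assumes an "average" nut sits at the midpoint of the quoted 50-300 mcg range, since the real distribution is unknown. It also uses the midpoints of the quoted national intake ranges. The 200 mcg target is the dose from the trials above.

```python
import math

TARGET_MCG = 200                          # dose used in the trials quoted above
NUT_RANGE_MCG = (50, 300)                 # selenium per Brazil nut (quoted range)
AVERAGE_NUT_MCG = sum(NUT_RANGE_MCG) / 2  # assumption: midpoint = 175 mcg

# Typical dietary intakes (mcg/day), midpoints of the quoted ranges.
DIET_MCG = {"Poland": 35, "Spain": 47, "Austria": 48, "Great Britain": 34}


def nuts_needed(target_mcg: float, mcg_per_nut: float) -> int:
    """Whole nuts needed to reach target_mcg from nuts alone."""
    return math.ceil(target_mcg / mcg_per_nut)


print(nuts_needed(TARGET_MCG, NUT_RANGE_MCG[0]))  # 4 (weakest nuts)
print(nuts_needed(TARGET_MCG, NUT_RANGE_MCG[1]))  # 1 (strongest nuts)
for country, diet in DIET_MCG.items():
    total = diet + AVERAGE_NUT_MCG
    print(country, total, total >= TARGET_MCG)
# With one average nut, every country lands between 209 and 223 mcg
```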

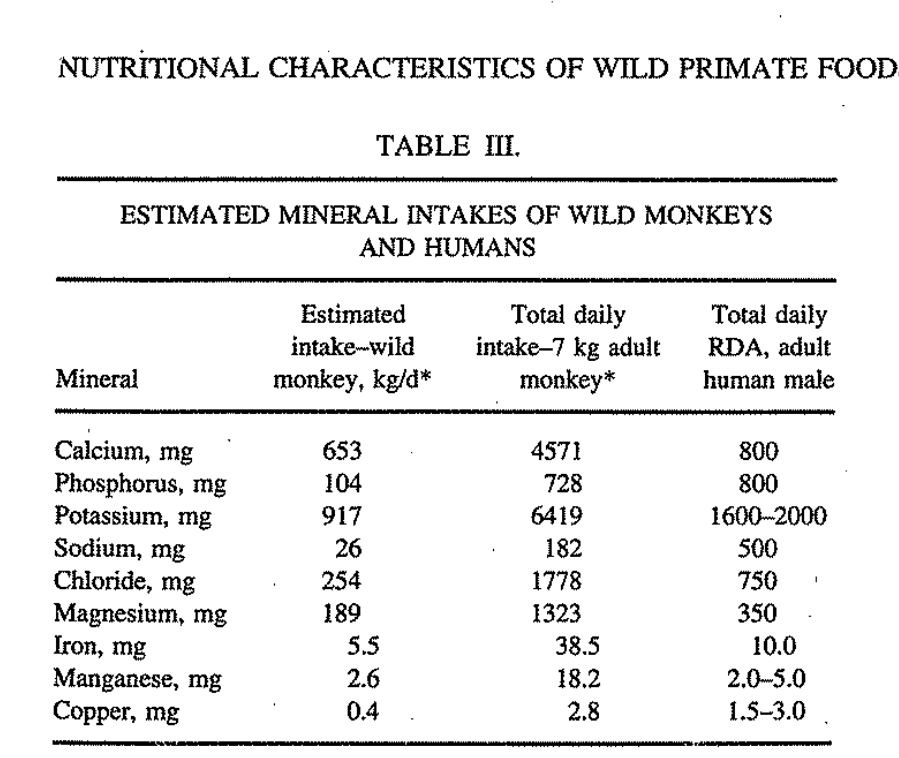

Scaled to weight, a wild howler monkey ingests 57 times more calcium and 38 times more magnesium (archive) (local) per day than what is recommended for us:

In that case, even massively increased amounts compared to what we ingest today shouldn't be harmful (they aren't for those monkeys) - and might be beneficial. Even if we assume that we metabolize magnesium 10 times better, that still leaves us with a 4 times increase that we probably should be getting. The commonly ingested averages (archive) (local) are very close to the recommended amounts, which seem inadequate:

This could explain why heart disease is the most common ailment of today's people, since magnesium is involved in a lot of heart functions (archive) (MozArchive).
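The "4 times" figure for magnesium comes from dividing the weight-scaled monkey multiple by the assumed absorption advantage. The sketch below takes the 10-fold "we metabolize it better" assumption from the text. The 400 mg reference is an example value (the US RDA for men aged 19-30), not something from the cited sources.

```python
# Howler monkey intake as a multiple of our recommended intake (weight-scaled).
MONKEY_MULTIPLE = {"calcium": 57, "magnesium": 38}


def adjusted_intake_mg(nutrient: str, rda_mg: float,
                       absorption_advantage: float = 10) -> float:
    """Intake implied by the monkey comparison after discounting for a
    hypothetical absorption advantage in humans."""
    return MONKEY_MULTIPLE[nutrient] * rda_mg / absorption_advantage


print(MONKEY_MULTIPLE["magnesium"] / 10)     # 3.8, i.e. "about 4 times"
print(adjusted_intake_mg("magnesium", 400))  # 1520.0
```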

Manganese is probably the most deficient nutrient in the usual diet, since the vast majority of common products (white bread, meats, oils, eggs etc.) have very little of it. I'll let Jane Karlsson (archive) (MozArchive) (an expert on this topic) speak:

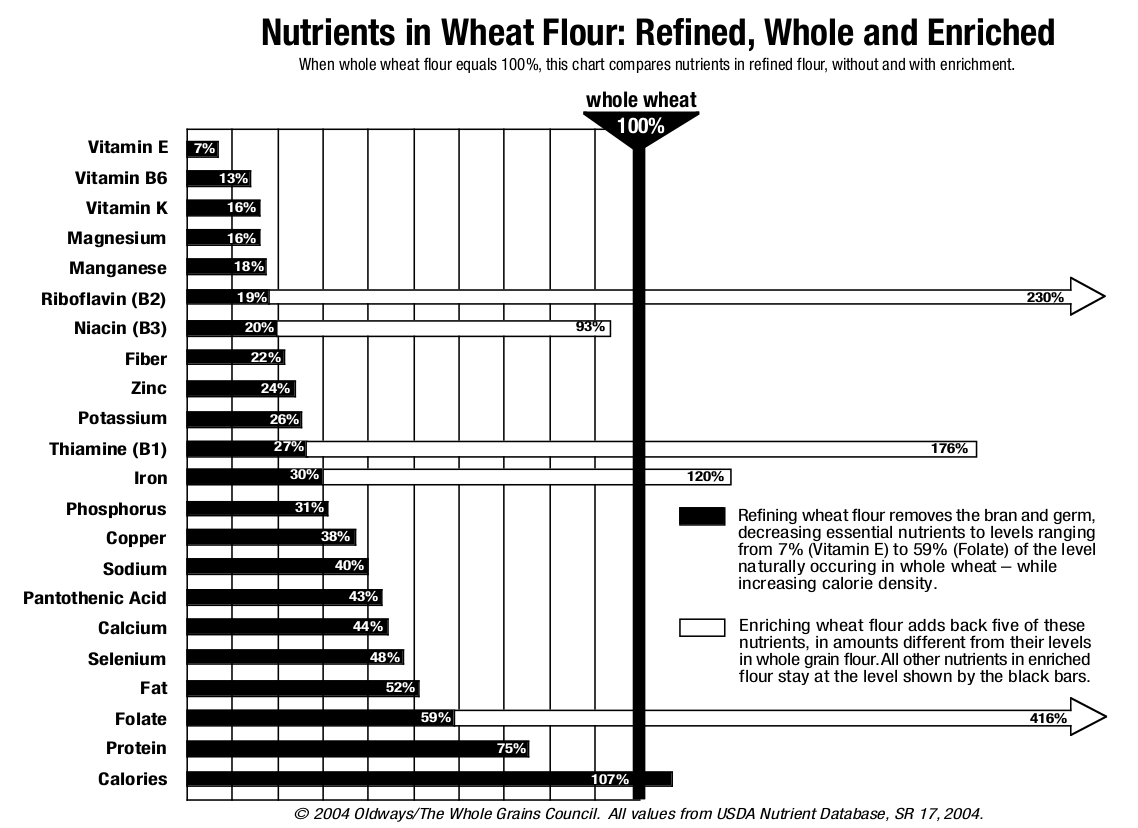

The western diet looks almost as if it was designed to be Mn deficient. Lots of animal foods which have hardly any and can be very high in iron; lots of saturated fat which inhibits Mn absorption and increases iron absorption https://ncbi.nlm.nih.gov/pubmed/11697763 and lots of refined carbs whose Mn has been removed and replaced with … iron.

The importance of Mn in cell biology can hardly be overstated. MnSOD actually prevents aging.

I find this astonishing. It means Mn deficiency is arguably the most important cause of all the age-related diseases we see today.

And from another thread (archive) (MozArchive):

The average manganese intake in the US is 2 mg/day. Surprisingly, the RDA is also 2 mg/day. According to the Linus Pauling Institute, it was decided on the basis of no evidence that the average intake was enough. It might be enough in a low-iron diet, but the western diet is very high in iron.

Manganese deficiency is implicated in diabetes, and the Ma Pi diet which apparently cures diabetes has 16 mg/day of manganese. This is 8 times more than the RDA. https://www.ncbi.nlm.nih.gov/pubmed/22247543

In some old studies, manganese-containing items have been shown to manage diabetes better than insulin:

He was treated with soluble insulin and long-acting insulin intramuscularly in large doses (100-200 units daily), but he responded poorly.

With an interest born of despair, we allowed him to prepare in the ward an extract by boiling the green leaves of alfalfa in water, and to drink the infusion. By this time his blood-sugar level had reached 648 mg. per 100 ml. Two hours later, he had clinical signs of hypoglycaemia and his blood-sugar was 68 mg. per 100 ml. The test was repeated thereafter on twelve occasions, the alfalfa extract being administered when his blood-sugar varied between 190 mg. and 580 mg. per 100 ml. The infusion was also given at different intervals after food and at varying times of the day. On each occasion there was the same predictable hypoglycaemic response

With 10 mg. of manganese chloride orally three times a day, fairly satisfactory diabetic control was obtained and it was possible to discharge the patient on this regimen.

There was no cure (but insulin isn't one either). Either way, this is a promising area of research that still hasn't been taken up, I think. There are lots of unanswered questions in that paper, and overall. The authors thought that an absolute manganese deficiency was unlikely in this patient:

It followed, therefore, that the patient was absolutely or relatively deficient in manganese. As there was no dietary deficiency, and as the daily requirement of manganese is very small, an absolute deficiency seemed unlikely, although this possibility could not be entirely excluded, because of the observation of an increased loss of manganese in the patient’s urine.

But again, this is assuming that the official requirements have been correctly set (they haven't).

The single best source of manganese is pineapple juice, and if you drink that, there is no need to worry about being deficient. Otherwise, whole grains.

With iodine, a similar trick was pulled as with vitamin D. It was assumed (archive) (MozArchive) that iodine has only one job in the body, and if that job was fulfilled, the entire requirement for the day would be met:

Thyroidal radioiodine accumulation is used to estimate the average requirement. Turnover studies have been conducted in euthyroid adults (Fisher and Oddie, 1969a, 1969b). In one of these studies, the average accumulation of radioiodine by the thyroid gland for 18 men and women aged 21 to 48 years was 96.5 μg/day (Fisher and Oddie, 1969a). The second study involved 274 euthyroid subjects from Arkansas. The calculated uptake and turnover was 91.2 μg/day (Fisher and Oddie, 1969b).

And those two studies from 1969 (!) have decided the human iodine requirements apparently for all time. And yet the same document says:

Observations in several areas have suggested possible additional roles for iodine. Iodine may have beneficial roles in mammary dysplasia and fibrocystic breast disease (Eskin, 1977; Ghent et al., 1993). In vitro studies show that iodine can work with myeloperoxidase from white cells to inactivate bacteria (Klebanoff, 1967). Other brief reports have suggested that inadequate iodine nutrition impairs immune response and may be associated with an increased incidence of gastric cancer (Venturi et al., 1993).

So, if iodine also has immune-boosting and anti-cancer effects, then why are only its thyroid-related jobs considered for determining adequate intakes? Again: "Thyroidal radioiodine accumulation is used to estimate the average requirement". But hey, it's now 2024, and we have more direct evidence that iodine is involved in beating infectious disease (archive) (local), for example:

In the lungs of KI[potassium iodide]-treated lambs, we found reduced hRSV A2 RNA levels and a reduction in gross and histological lesions compared with untreated lambs infected with hRSV A2. After 6 days of I 2 supplementation, treated lambs had significantly increased [I2] in tracheal ASL compared with nonsupplemented control lambs. These findings suggest that I2 administration is associated with reduced RSV disease severity.

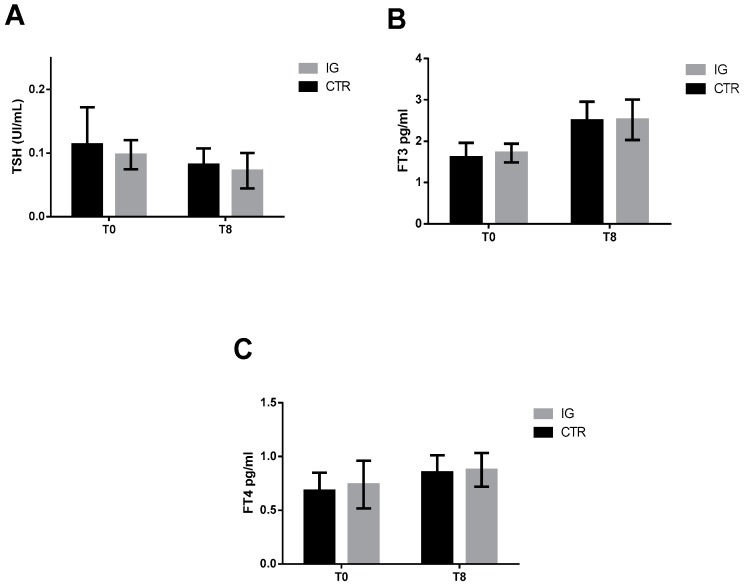

Another study (archive) (MozArchive) confirmed not only the immune-supporting effects of increased iodine intakes...

The highest enriched pathway was “Fc gamma R-mediated phagocytosis” (FDR: 2.63 × 10−6), which was associated with clearance of opsonized particles, suggesting that iodine supplementation could help the immune system against invading bacteria

...but also the complete safety of it:

All animals maintained a good state of health for the entire duration of the trial (8 weeks)

No destruction of the thyroid done by iodine (the mainstream's favorite boogeyman), and in fact the hormonal profile was better in the supplemented group. Maybe you're wondering how much they actually used. Wonder no more:

During the 3-week acclimatization period (21 days), both the control (CTR) and the experimental iodine groups (IG) received a basal diet that mainly consisted of alfalfa hay plus a custom-formulated concentrate supplemented with 20 mg/day/animal I in order to guarantee the daily micronutrient requirement for each animal; then, the IG animals were fed for 56 days (during April and May) with a custom-formulated concentrate supplemented with additional 65 mg/day/animal of I in order to obtain a total intake of about 85 mg

The exact weights of the cows used in this study are not listed, but generally, the Friesian cow appears to weigh a little over 700 kg (archive) (MozArchive) on average. Scaled to weight, the human intake would be about 6-10mg (several times what the Institute of Medicine thinks is the threshold for toxicity, and dozens of times more than the supposed daily requirement).
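Here is the cow-to-human scaling, spelled out. It assumes linear scaling by body weight, a 700 kg cow (the average Friesian weight mentioned) and a 70 kg person. The comparison values are the IoM's adult RDA for iodine (150 μg) and its Tolerable Upper Intake Level (1,100 μg).

```python
COW_KG = 700     # assumed average Friesian weight (from the text)
HUMAN_KG = 70    # assumed adult weight
RDA_MG = 0.150   # IoM adult RDA for iodine
UL_MG = 1.1      # IoM Tolerable Upper Intake Level for adults


def scale_dose(dose_mg: float, from_kg: float = COW_KG,
               to_kg: float = HUMAN_KG) -> float:
    """Scale a daily dose linearly by body weight."""
    return dose_mg * to_kg / from_kg


total = scale_dose(85)  # total intake of the supplemented group
extra = scale_dose(65)  # the supplement on top of the basal 20 mg
print(total, extra)                                    # 8.5 6.5
print(round(total / RDA_MG), round(total / UL_MG, 1))  # 57 7.7
```

That puts the 6-10 mg range from the text at roughly 6.5 mg (supplement only) to 8.5 mg (total intake), with lighter cows pushing it higher.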

All of this puts the whole Covid situation into a different light. The seafood-eating Japanese had an extremely low death rate (archive) (MozArchive) of 0.22% at an iodine intake of 1-3 mg per day (archive) (MozArchive): "By combining information from dietary records, food surveys, urine iodine analysis (both spot and 24-hour samples) and seaweed iodine content, we estimate that the Japanese iodine intake--largely from seaweeds--averages 1,000-3,000 μg/day".

So how much iodine should we be getting? This study (local) appears to put the daily requirement for optimal function of the thyroid alone at somewhere between 201 and 300 μg, at minimum:

We attempted to evaluate optimal iodine intake and investigated changes in the iodine intake of the Styrian population during the last 13 years. Our results from euthyroid patients show that TSH values are lowest at an iodine excretion rate between 200 and 300 μg of iodine per gram of creatine. In this group, Tg levels were low as well. From this we conclude that an optimal daily intake of iodine is approximately 250 μg.

But it could easily be more, because the symptoms traditionally used to judge thyroid function (archive) (MozArchive) ("Arthritis, irregularities of growth, wasting, obesity, a variety of abnormalities of the hair and skin, carotenemia, amenorrhea, tendency to miscarry, infertility in males and females, insomnia or somnolence, emphysema, various heart diseases, psychosis, dementia, poor memory, anxiety, cold extremities, anemia, and many other problems were known reasons to suspect hypothyroidism.") have not been examined in this study. Still, at least we have confirmation that the TSH doesn't rise with the higher iodine intakes as it does in hypothyroidism (MozArchive): "A high TSH level—above 4.5 mU/L—indicates an underactive thyroid, also known as hypothyroidism. This means your body is not producing enough thyroid hormone. [...]". And remember, this is only considering iodine's thyroid jobs, when we already know it has more. And since even the compromised Institute of Medicine admits 1mg per day is non-toxic, we might as well aim higher and replicate the Japanese; the safety of this is corroborated by the previously mentioned animal experiments. However, we don't know how much we actually need, as the research to discover true iodine requirements in humans has not been done. The only study I have found that actually compares different dosages in humans was this one (archive) (MozArchive) about women with some breast disease. And guess what:

Patients recorded statistically significant decreases in pain by month 3 in the 3.0 and 6.0 mg/day treatment groups, but not the 1.5 mg/day or placebo group; more than 50% of the 6.0 mg/day treatment group recorded a clinically significant reduction in overall pain. All doses were associated with an acceptable safety profile. No dose-related increase in any adverse event was observed.

This not only corroborates the safety of milligram doses, but also appears to show that the multiple-mg amounts might actually be what we need, at least when disease is concerned. How much this applies to men or women without such issues, I have no idea. What I do have an idea about is how common iodine deficiency is in countries such as:

Median urinary iodine concentration (UIC) among adults (n = 1092) was 93.6 µg/L, indicating mild iodine deficiency according to WHO thresholds.

The mean urinary iodine excretion in our cohort was 109 +/- 81 microg/g level indicating a borderline adequate (meaning "right on the verge of deficiency") iodine intake (100-200).

The percentage of participants with excretion below the RDA was 5.5% in men and 9.1% in women. (This is despite the country's affinity for iodine-rich seaweeds.)
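Here is a made-up example that makes the problem with averages concrete. It uses nine invented urinary iodine values, not data from any of these studies. A mean in the "adequate" range can coexist with a median below the WHO cut-off of 100 µg/L, and with most people falling below it.

```python
from statistics import mean, median

# Invented urinary iodine concentrations (µg/L) for nine people.
uic = [55, 60, 70, 80, 90, 110, 130, 150, 350]
CUTOFF = 100  # WHO: a median below 100 µg/L indicates insufficient intake

share_below = sum(value < CUTOFF for value in uic) / len(uic)
print(round(mean(uic), 1))    # 121.7 -> looks "adequate"
print(median(uic))            # 90    -> below the cut-off
print(round(share_below, 2))  # 0.56  -> over half are below 100
```

A single high value (350 here) is enough to drag the mean up past the cut-off while most of the group sits below it.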

It is also important to realize that using the mean to judge iodine deficiency in a population is flawed. Some people will score (way) above it, and some below, so the few who have ingested a lot of iodine will mask the many who got too little. This is exactly how they hid the extreme rate of iodine deficiency in that Germany study. If you read the entirety of it, you find this quote: "Moreover, 283 (36%) probands had mild iodine deficiency with urinary iodine excretions between 50–99 µg/g". Yet the mean was "borderline adequate"... while 36% of people were deficient... hahaha. The same issue (but even more severe) exists in the Belgian study, where 56% of the participants actually had a urinary iodine excretion below 100 µg/L (deficient). And it's easy to see why this is so, as the common diet contains basically no iodine. Fruit, vegetables, nuts, seeds, grains, and spices all have none or very little. Land meat has some but not enough (you'd need to eat about 2-3kg of it per day to fulfill the official iodine requirements with it alone). Some people look at the nutrition tables and notice that milk has a lot, so they dismiss the issue. But it actually might not be that good of a source (archive) (MozArchive) because:

Milk iodine concentrations in industrialized countries range from 33 to 534 μg/L and are influenced by the iodine intake of dairy cows, goitrogen intake, milk yield, season, teat dipping with iodine-containing disinfectants, type of farming and processing.

Teat dipping with iodine-containing disinfectants

...seriously? Anyway - unless you're sure that the cows in your area are having that teat dipping

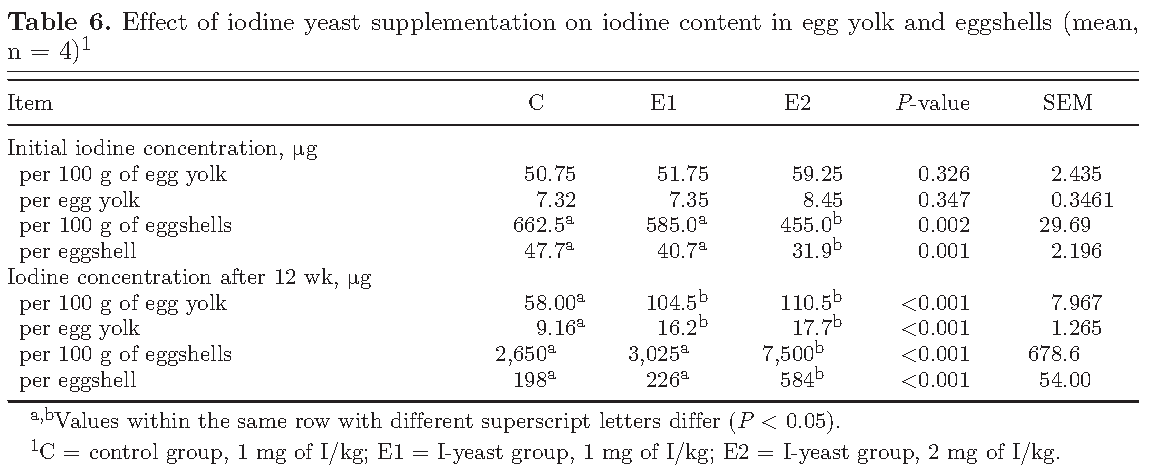

done - you shouldn't rely on milk. Eggs just aren't a good source either way; even if the hens are supplemented with iodine, the content is still low (local):

The hatching eggs (n = 10) had I concentrations of 687 ± 170 μg I/kg in the KI experiment and 484 ± 82 μg I/kg in the Ca(IO3)2 experiment.

Since an egg weighs about 60 g, even the top of the KI experiment's range (687 + 170 = 857 μg of iodine per kg of eggs) results in only about 50 μg of iodine per egg. Another experiment (local) confirms this:

And remember that these were iodine-supplemented eggs; "normal" ones contain half the iodine. This means you'd need to eat about 6 normal eggs per day just to hit the official requirements. Therefore, you cannot realistically reach therapeutic intakes through either milk or eggs. That is, unless you eat the shells, which in this study ended up having quite a lot of iodine, even the ones from unsupplemented chickens. Yet I can't imagine too many people wanting to do that (and you'd need to eat five shells per day for just 1 mg of iodine). But it gets worse: much of the iodine is (as expected from what happens to other nutrients) destroyed by cooking (archive) (MozArchive):

It was found that the mean losses of iodine during different procedures used was 1) pressure cooking 22%, 2) boiling 37%, 3) shallow frying 27%, 4) deep frying 20%, 5) roasting 6%, 6) steaming 20%.
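To line up the milk, egg and cooking figures quoted above, here is a minimal Python sketch. It assumes an official adult target of 150 µg of iodine per day (the US adult RDA), a 60 g egg, "normal" eggs at half the supplemented upper value, and that the 37% boiling loss from the cooking study also applies to eggs (an assumption; that study is not egg-specific). The helper `amount_needed` is an illustrative name, not from any source.

```python
import math

TARGET_UG = 150  # assumed official adult target, µg iodine/day (US RDA)

def amount_needed(target_ug: float, ug_per_unit: float) -> float:
    """Units of food (litres, eggs, ...) needed to supply target_ug."""
    return target_ug / ug_per_unit

# Milk: 33-534 µg/L range from the quote above
milk_low = amount_needed(TARGET_UG, 33)    # litres/day at the low end (~4.5)
milk_high = amount_needed(TARGET_UG, 534)  # litres/day at the high end (~0.28)

# Eggs: 857 µg/kg (687 + 170) upper value, 60 g egg
supplemented_egg_ug = 857 * 0.060          # ~51.4 µg per supplemented egg
normal_egg_ug = supplemented_egg_ug / 2    # assumption: "normal" eggs have half
eggs_needed = math.ceil(amount_needed(TARGET_UG, normal_egg_ug))

# Cooking: assume the 37% boiling loss also applies to eggs
boiled_egg_ug = normal_egg_ug * (1 - 0.37)
boiled_eggs_needed = math.ceil(amount_needed(TARGET_UG, boiled_egg_ug))

print(f"milk: {milk_high:.2f}-{milk_low:.1f} L/day")
print(f"eggs: {eggs_needed} raw, {boiled_eggs_needed} boiled per day")
```

Under these assumptions it prints a milk requirement of 0.28-4.5 L/day depending on the farm, and 6 raw versus 10 boiled normal eggs per day, which lines up with the "6 eggs per day" estimate in the text.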

Taking all of this into account, it's obvious that the average person cannot even come close to sufficiency through common foods. The only thing saving most people from complete iodine starvation (rivaling the deficiencies of vitamins C and D) is the widespread use (archive) (MozArchive) of iodized salt:

The percentage of daily iodine intake from water, iodized salt, iodine-rich processed foods, and cooked food were 1.0%, 79.2%, 1.5%, and 18.4%, respectively.

Another example (archive) (MozArchive):

Median iodine intake met the European Food Safety Authority adequacy level only in teenagers in the highest quartile of salt consumption (salt intake > 10.2 g/day).

And another... (archive) (MozArchive):

Women living in households with adequately iodized salt had higher median UIC (for pregnant women: 180.6 µg/L vs. 100.8 µg/L, respectively, p < 0.05; and for non-pregnant women: 211.3 µg/L vs. 97.8 µg/L, p < 0.001).

Yet the WHO (archive) (MozArchive) and other medical institutions now recommend lowering your salt intake, so we can expect iodine intake to fall off a cliff alongside it. Also, much salt is not iodized, so it's not that good a source either way. For example, in the USA (local):

Contrary to popular belief, the vast majority of salt in the U.S. diet is not iodized. Approximately 70% of the salt is used commercially-virtually none of the salt used by the prepared or the fast food industry is iodized. Approximately 70% of the remaining 30%, sold to consumers in grocery stores, is iodized, representing one-fifth of the total salt consumed (14, 71).
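The "one-fifth" in that quote is just the two 70% shares multiplied together. A minimal Python sketch of the arithmetic, using only the percentages from the quote (the variable names are illustrative):

```python
# Shares quoted above for US salt use
commercial_share = 0.70                # salt used commercially (virtually none iodized)
retail_share = 1 - commercial_share    # the remaining 30%, sold in grocery stores
iodized_fraction_of_retail = 0.70      # share of grocery-store salt that is iodized

# Iodized salt as a fraction of ALL salt consumed
iodized_share = retail_share * iodized_fraction_of_retail
print(f"{iodized_share:.0%} of total salt is iodized")  # 21%
```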

The UK has an even worse situation (archive) (MozArchive):

Iodised salt was available in thirty-two of the seventy-seven supermarkets (41·6 %). After accounting for market share and including all six UK supermarket chains, the weighted availability of iodised salt was 21·5 %. The iodine concentration of the major UK brand of iodised salt is low, at 11·5 mg/kg.
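The UK figures can be turned into daily amounts with a little arithmetic. A minimal Python sketch, assuming a 150 µg/day adult target, the WHO's recommended maximum of 5 g of salt per day, and (best case) that every gram of salt eaten is the major brand at 11.5 mg/kg. Note that 11.5 mg/kg is the same as 11.5 µg per gram.

```python
# Figures quoted above for the UK
stores_with_iodised = 32
stores_surveyed = 77
IODINE_MG_PER_KG = 11.5   # major UK brand; 11.5 mg/kg == 11.5 µg per gram of salt

# Assumptions (not from the quote)
TARGET_UG = 150           # official adult target, µg/day
WHO_SALT_LIMIT_G = 5      # WHO recommended maximum salt intake, g/day

availability = stores_with_iodised / stores_surveyed      # unweighted share of stores
ug_per_gram = IODINE_MG_PER_KG                            # mg/kg -> µg/g is 1:1
salt_needed_g = TARGET_UG / ug_per_gram                   # grams of salt for the target
iodine_at_limit_ug = WHO_SALT_LIMIT_G * ug_per_gram       # iodine if you obey the WHO limit

print(f"availability: {availability:.1%}")
print(f"salt for {TARGET_UG} µg: {salt_needed_g:.1f} g/day")
print(f"iodine at {WHO_SALT_LIMIT_G} g salt: {iodine_at_limit_ug:.1f} µg/day")
```

The first line reproduces the quote's 41.6%. Even in the best case, staying within the WHO salt limit supplies 57.5 µg, well under half of the official target, and hitting 150 µg would take about 13 g of that salt daily.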

It is clear that iodine deficiency is a really big problem, and that is even when assuming only the laughable official standards. The real iodine requirements, taking into account all of its jobs, are probably several times higher and could only be hit by regular seafood eaters. Therefore, here we have yet another nutrient in which almost everyone is deficient. We just don't know how much we're lacking, or what the exact effects are at different intakes.

This nutrient isn't like vitamin D, however, and you can definitely get too much and hurt yourself. Though some people have benefitted from iodine megadoses, others have been wrecked by them. Even the megadose using doctors have found iodism (iodine poisoning) in a significant percentage of their patients - a positive correlation was found between those 2 parameters: zero percent iodism at a daily amount of 1.4-2 mg; 0.1% iodism with 3-6 mg daily; 0.5% with 9 mg and 3% with 31-62 mg

(this is from the book Iodine for Greatest Mental and Physical Health). So, I don't recommend the megadoses (but, if you look carefully, <=2mg was completely safe, and 3-6 almost so; I wouldn't go above that). If iodine works like every other nutrient, it's going to be used up more in disease states (there is some evidence (archive) for this - Tissue iodine levels were lower in gastric cancer tissue (17.8±3.4ngI/mg protein, mean±SEM) compared with surrounding normal tissue (41.7±8.0ngI/mg protein) (p<0.001)

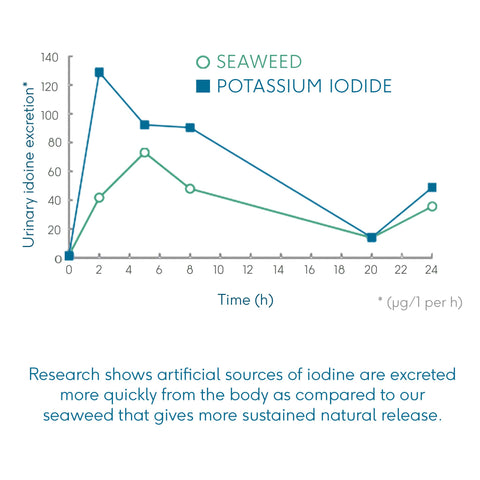

), which is the reason I suspect some can endure the megadose and some can't. Lacking companion nutrients is another possibility; selenium (archive) is a candidate. Worrying about iodine poisoning for the common person is totally pointless, though, as it's pretty much impossible to reach those dangerous amounts through food; the real issue is avoiding severe deficiency while eating the "regular diet". As usual I recommend food sources, which appear to be utilized better than supplements:

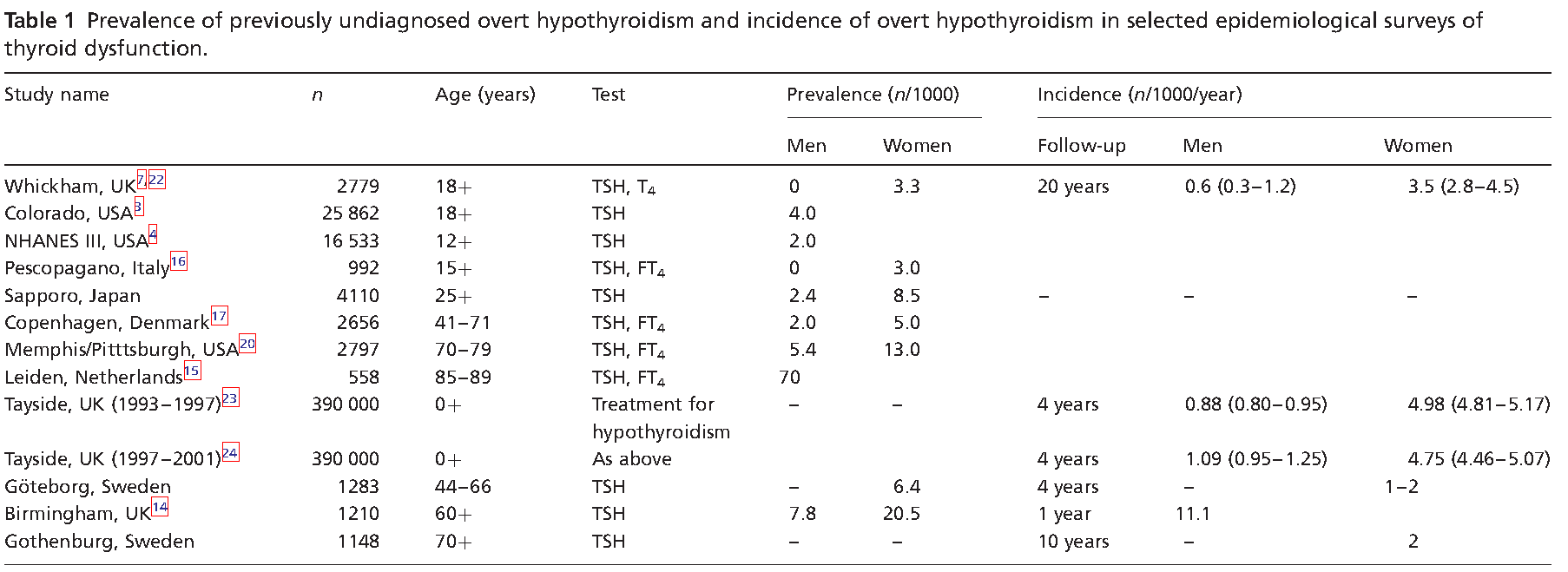

Here (archive) (MozArchive) is a table of the iodine contents in common seaweeds. As you can see, a few grams per day of most (dried) seaweeds is enough to provide significant amounts of iodine, way beyond what you could get from even a liter of milk or a dozen eggs. Some might worry that a few of the seaweeds contain too much iodine; but the Japanese, who eat them freely, don't appear to suffer from any additional issues compared with other countries, which do not eat seaweed at all. For example, their rate of hypothyroidism (local), which is supposed to skyrocket with high iodine intakes, is right in line with other countries:

Lots of missing data there, but it's enough to see that countries like the UK and USA have more hypothyroidism despite close to zero seaweed intake. The incidence of thyroid autoimmunity is also the same (local) in the various regions of Japan, despite significant differences in iodine intake: "It seems that the differences in dietary iodine intake (from several hundreds to several thousands µg/day) do not affect the TGAb and/or TPOAb positivity in Japan". You can always, of course, limit yourself to below whatever level you think is too much.

This is definitely the best oil to use, and the others cannot even lick its boots. If I had to rate the oils in terms of healthfulness, the list would look like this:

Coconut oil has been shown to have benefits against pretty much everything you can think of (archive) (MozArchive) (and even what you cannot think of). This probably means it is affecting some fundamental processes inside the body; Ray Peat has some ideas about that (archive) (MozArchive). On the other hand, you can barely find even one benefit for those other fats aside from providing a lot of calories. Olive oil does have some antioxidants, but no proven beneficial effects in scientific studies as far as I can see (yes, I actually looked a while back), and its significant amount of PUFA might still be dangerous when fried. Butter is probably harmless, but doesn't do much other than load you up with calories and an irrelevant amount of a few vitamins. Other animal fats are worse because they have more PUFA, and because fat is the tissue in which an animal stores toxins. The only reason coconut oil isn't recognized as the elixir of the gods is the cholesterol scare that somehow still refuses to die (even though CO won't raise cholesterol by a relevant amount, or at all).

Quite a ruckus broke out in natural health circles back in 2015 when Kaayla Daniel did independent testing and came up with a report exposing FCLO (fermented cod liver oil) as a total scam (I don't necessarily agree with all the claims in there, though). So why am I bringing this up now? Well, this crap is still being marketed and sold as a health food. And the Weston A. Price Foundation still shills it (archive) (MozArchive) on its website, with fake lab tests to support it (this, in my opinion, makes everything written on that site suspect). This fraud is kind of unique in that literal rotting is twisted into some kind of health-promoting process. At least other frauds, like the overpriced vitamin C tablets, are usually harmless. On the other hand, if you read Kaayla's report, it proves that the FCLO contains many toxic substances created by the decomposition of fish corpses. When testimonies started piling up (archive) (MozArchive) that this stuff was harmful to human health, the usual suspects doubled down instead of dropping the product.

Health matters not in a world based on the profit motive, except as an empty promise from the product manufacturer. With FCLO it's even worse than usual: not only has the promise not been fulfilled, it was completely reversed once it became obvious that people's health had been sacrificed on the altar of the almighty dollar. You pay almost 40 US dollars (archive) (MozArchive) for a bottle of something that poisons you and eventually kills you (archive) (MozArchive). Imagine how many people were spending fortunes on this rotten poison pretending to be a health food, hoping to fix their health problems as they had been promised, only to get cancer or heart failure and die. All while the sellers and the shills raked in the cash. What a cruel joke.

When are people finally going to recognize the profit motive as the main contributor to such situations? I mean, Green Pasture and the WAPF shills have discovered a gold mine with this product and are not going to abandon it (though some WAPF members (archive) (MozArchive) did leave the organization once they realized what's up, showing that ethics prevail at least sometimes), knowing how much power money gives you in this world. It's not that most, or even a significant number, of people are inherently evil, but that the world rewards evil behaviors like fraud by giving the perpetrators enough money to stop working and stop worrying about survival, as well as to enjoy stability, high social status, trips, premium items, etc. I bet that a lure this attractive pulls in a lot of fish.

I don't think that all the nuances of this product have been figured out. We don't really know how much of the relevant vitamins are actually in it. Not all of the samples were rotten, and we don't know why that is, either. Maybe they were adulterating them with other oils, or safer production processes were figured out later. But there's too much secretiveness on the part of the manufacturers, which is expected when the profit motive is involved. And people have already died from this, so it's simply practical to avoid it either way. Even if there weren't any production issues, the fish liver (from which the FCLO is made) still contains an unphysiological amount of PUFA for humans. Find your vitamins elsewhere, like the Sun and the juice.

These posts (archive) (MozArchive) have stuck in my mind ever since I read them:

Recently Per Wikholm’s old friend and co-author came out with a book about beans and resistant starch in Sweden. He wrote it with a guy who suffers from T1D. The guy simply started eating 1/2 cup of beans with every meal, and this caused ridiculously stable blood sugars, which he didn’t have even on low carb.

I’m reading the book now. The T1D guy was on 100 units of insulin a day doing low carb. After adding the beans to each meal he’s down to 20 units a day (the authors comments that this is counterintuitive, adding carbs gives less insulin). Some days he can go completely without “food insulin”, only taking the baseline insulin. He also lost lots of weight.

An insulin-dependent diabetic being able to drop 80% of his insulin dosage is just insane. And this is in comparison to a low-carb diet, remember, proving that the latter is not the best approach for diabetes. Modern medicine cannot touch those results, either.

Speaks for itself. Please remember that all junk food, white bread and white rice are refined.

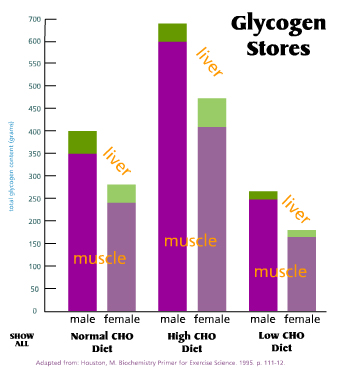

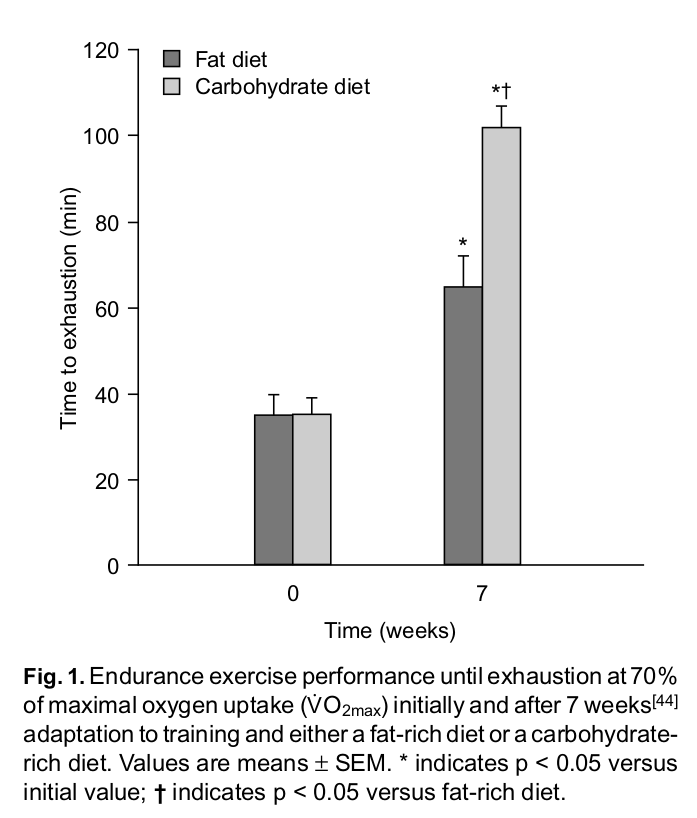

They are unsustainable, pointless, and dangerous. You are required to remove from your diet food groups that are not only harmless but very beneficial: whole grains, fruit, beans, potatoes and honey, for example. Anything close to a "normal" life will certainly not be possible anymore. You are pretty much left with just meat, eggs, butter, cheese, nuts and some vegetables. But even the nuts and vegetables have to be curtailed (archive) (MozArchive):

Be more careful with slightly higher-carb vegetables like bell peppers (especially red and yellow ones), brussels sprouts and green beans to stay under 20 grams of carbs a day. The carbs can add up. For instance, a medium-size pepper has 4-7 grams of carbs.
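The numbers in that quote make the budget problem concrete. Here is a minimal Python sketch of how many whole medium peppers fit under the 20 g limit at the low and high carb estimates. The function name is made up for illustration, and the sketch assumes nothing else containing carbs is eaten that day.

```python
def whole_servings_within_budget(budget_g: float, carbs_per_serving_g: float) -> int:
    """How many whole servings fit under a daily carb budget (nothing else eaten)."""
    return int(budget_g // carbs_per_serving_g)

DAILY_CARB_LIMIT_G = 20   # keto limit from the quote
PEPPER_CARBS_G = (4, 7)   # medium bell pepper, low and high estimate from the quote

for carbs in PEPPER_CARBS_G:
    n = whole_servings_within_budget(DAILY_CARB_LIMIT_G, carbs)
    print(f"{carbs} g/pepper: {n} peppers use {n * carbs} g of the {DAILY_CARB_LIMIT_G} g budget")
```

At 7 g per pepper, two peppers already take 14 g, leaving 6 g for every other vegetable, nut and sauce that day.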

You really need to autistically count the carbohydrates (and protein) in everything that passes your lips, every second of every day. Even that, seemingly, isn't enough (archive) (MozArchive) to bring about ketosis. Wow, that link is really gold. Someone was eating all the right keto foods, yet still wasn't in ketosis when he measured his ketones. So he had to drop the keto angel broccoli. Hahahaha...oh wait, it's not funny. You're denying yourself everything and still can't reach your prized ketotic state. And of course, if you dare to put a few slices of bread in your mouth, it's back to square one...for a week (archive) (MozArchive). Do you know why it works like this? Because ketosis is fucking unnatural (to clarify, the only reason it even exists as a metabolic state is so that our energy-intensive brain can keep itself running during long-term starvation)! If you look at hunter-gatherer groups (current or extinct), not a single one of them is in ketosis, not even the Inuit (local): "Yet seminal studies carried out on Inuit subjects in the early twentieth century yielded surprising results from a metabolic perspective. Low ketone bodies in the breath and urine were observed in the fed state [...] These results suggest that the traditional Inuit diet may not actually be ketogenic, as commonly assumed, despite being very low in carbohydrate". Still, the keto cultists will try everything to reach that state for no reason, including guzzling butter (archive) (MozArchive) or eating these keto delicacies: